Latest Issue

Volume 29, Issue 12, 2024


Abstract: Image deblurring is a fundamental task in computer vision with important applications in medical imaging, surveillance, and satellite imagery. Over the years, it has attracted considerable research attention, leading to the development of numerous dedicated methods. However, in real-world scenarios, the imaging process may be subject to various disturbances that lead to complex blurring. Factors such as inconsistent object motion, camera lens defocus, pixel compression during transmission, and insufficient lighting produce a range of intricate blurring phenomena that further amplify the deblurring challenges. Image deblurring in real-world scenarios thus becomes a complex ill-posed problem, and conventional deblurring methods based on simulated blur degradations often fall short when confronted with it. These limitations stem from the assumptions on which conventional methods depend, which include but are not limited to the following: 1) the noise in the image follows a Gaussian distribution; 2) the blur is uniform and spatially invariant; and 3) the blurring phenomena are independent of one another. Although convenient for theoretical analysis and algorithm development, these assumptions prove restrictive when applied to the complex deblurring problems encountered in real-world scenarios. Consequently, there is a pressing need for specialized research tailored to the challenges of real-world image deblurring and for more effective image restoration methods. Real-world image deblurring is an intricate task that requires algorithms and techniques capable of accommodating the diversity of blurring factors and the complexities of practical environments. 
This paper attempts to create unique deblurring solutions that can efficiently handle real-world scenarios and enhance the practical applicability of deblurring methods. One approach to addressing the above challenges is to design algorithms that are robust to various types of noise and can handle non-uniform and coupled blurring effects. Machine learning and deep learning have also emerged as powerful tools for complex real-world deblurring problems. Deep learning models, such as convolutional neural networks and generative adversarial networks, have shown remarkable adaptability in learning from diverse data and producing high-quality deblurred images. Furthermore, researchers are exploring the integration of multiple sensor inputs, including depth information, to improve deblurring accuracy. These multi-modal approaches leverage additional data sources to disentangle complex blurring effects. As real-world image deblurring continues to gain attention, the research community is expected to contribute valuable insights and develop innovative solutions that further improve image restoration in complex scenarios. Ongoing collaboration among researchers in computer vision, machine learning, optics, and imaging will likely yield breakthroughs in addressing real-world deblurring challenges. In short, real-world image deblurring is a multifaceted problem that requires tailored solutions to overcome the limitations of conventional deblurring methods. By acknowledging the complexities of real-world blurring phenomena and drawing on advanced algorithms, machine learning, and multi-modal approaches, researchers are working toward better image restoration in practical, challenging environments. Despite the growing interest in real-world deblurring, comprehensive surveys on the subject remain scarce. 
To bridge this gap, this paper conducts a systematic review of real-world deblurring problems. From the perspective of image degradation models, this paper delves into various aspects and breaks down the associated challenges into isolated blur removal methods, coupled blur removal methods, and methods for unknown blur in real-world scenarios. This paper also provides a holistic overview of the state-of-the-art research in this domain, summarizes and contrasts the strengths and weaknesses of various methods, and elucidates the challenges that hinder further improvements in image restoration performance. This paper also offers insights into the prospects and research trends in real-world deblurring tasks and offers potential solutions to the challenges ahead, including the following: 1) Shortage of paired real-world training data: Acquiring high-quality training data with blur and sharp images that accurately represent the diversity of real-world scenarios is a significant challenge. The scarcity of comprehensive, real-world datasets hinders the development of supervised deblurring tasks. To address the lack of real-world data, researchers are exploring data synthesis and unsupervised learning techniques. By generating synthetic data that simulate real-world scenarios, algorithms can be trained on highly diverse data, and unsupervised learning is particularly suitable for improving adaptability to real-world conditions. 2) Efficiency of complex models: Recent deblurring algorithms rely on complex deep learning models to achieve high-quality results. However, the models often result in computational inefficiency, making these algorithms impractical for real-time or resource-constrained applications. The computational overhead and memory requirements of these models also limit their deployment in practical scenarios. 
Researchers are developing highly efficient model architectures, such as lightweight neural networks, and applying model compression techniques to strike a balance between computational efficiency and deblurring performance, making these models suitable for real-time applications and resource-constrained environments. 3) Overemphasis on degradation metrics: Many deblurring methods prioritize optimizing quantitative metrics related to the reconstruction of image details. While these metrics provide a quantitative measure of image quality, they may not align with quality as perceived by the human visual system. A narrow focus on these metrics may therefore neglect the importance of results that are visually realistic and aesthetically pleasing to human observers. There has also been a growing emphasis on perceptual quality metrics, which evaluate the visual quality of deblurred images based on human perception. Integrating these metrics into the evaluation process can help ensure that deblurred images are not only quantitatively accurate but also visually pleasing. As research on real-world image deblurring continues, the above challenges are expected to be gradually addressed, leading to effective and practical deblurring solutions. This survey aims to provide a comprehensive understanding of the current landscape of research in real-world deblurring and to offer a roadmap for further advancements in this critical area of computer vision.
Keywords: image deblurring; real-world scenes; non-uniform blur; coupled blur; unknown degradation representation
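
The three assumptions listed in the abstract above can be made concrete with the degradation model commonly used in the deblurring literature. The notation here is an assumption introduced for illustration and is not taken from the paper: y is the observed blurry image, x the latent sharp image, k the blur kernel, * convolution, n the noise, and p, q pixel positions. The first line is the conventional uniform model; the second is one common way of writing spatially varying blur, in which each pixel has its own kernel.

```latex
% Uniform (spatially invariant) blur with additive Gaussian noise
y = k \ast x + n, \qquad n \sim \mathcal{N}(0, \sigma^2 I)

% Non-uniform blur: the kernel k_p depends on the pixel position p
y(p) = \sum_{q} k_p(q)\, x(p - q) + n(p)
```

Real-world blur can violate all three assumptions at once: the noise need not be Gaussian, the kernel varies across the image, and several degradations may act jointly instead of independently.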


Published: 2024-12-16

Abstract: Human pose estimation (HPE) is a prominent area of research in computer vision whose primary goal is to accurately localize annotated keypoints of the human body, such as wrists and eyes. This fundamental task serves as the basis for numerous downstream applications, including human action recognition, human-computer interaction, pedestrian re-identification, video surveillance, and animation generation. Thanks to the powerful nonlinear mapping capabilities of convolutional neural networks, HPE has advanced notably in recent years. Despite this progress, HPE remains challenging, particularly in the presence of complex postures, variations in keypoint scale, and occlusion. Notably, current heatmap-based methods suffer severe performance degradation under occlusion, which remains a critical challenge in HPE because diverse human postures, complex backgrounds, and various occluding objects can all degrade performance. To review recent advances in occlusion-aware HPE, this paper explores why predicting occluded keypoints is difficult and analyzes the reasons behind these difficulties. One identified challenge is the lack of annotated occluded data. Annotating occluded data is inherently complex and demanding. Most prevalent HPE datasets focus on visible keypoints, and only a few address and annotate occlusion scenarios. This lack of annotated occluded data during training significantly compromises the robustness of models when body keypoints are partially or completely obstructed. Feature confusion is another key challenge, affecting top-down HPE methods, which crop the target person’s region from the image using detected bounding boxes before predicting keypoints. 
However, in the presence of occlusion, these detection boxes may include individuals other than the target person, which interferes with accurate keypoint prediction. This issue is particularly problematic because the high feature similarity between the target person and the interfering individuals prevents the model from distinguishing their features effectively, which compromises the accuracy of keypoint predictions and underscores the need for strategies that address feature confusion in occluded scenes. Inference also becomes particularly challenging under substantial occlusion. Extensive occlusion removes the contextual and structural information that is essential for accurately predicting the occluded keypoints, and its absence impedes the model’s ability to draw precise conclusions. The loss of contextual information also hampers the model’s capacity to draw on adjacent keypoints, which is crucial for making informed predictions about occluded keypoints. This, in turn, can result in omitted keypoints or anomalous pose estimates. In addition, this paper systematically reviews representative methods published since 2018. Based on the training data, model structure, and output results of neural networks, this paper categorizes methods into three types, namely, preprocessing based on data augmentation, structural design based on feature discrimination, and result optimization based on human body priors. Preprocessing methods based on data augmentation generate data with occlusion to augment the training samples, compensate for the lack of annotated occluded data, and alleviate the performance degradation of the model in the presence of occlusion. 
These techniques utilize synthetic methods to introduce occlusive elements and simulate occlusion scenarios observed in real-world settings. Through these techniques, the model is exposed to a diverse set of samples featuring occlusion during the training process, thereby enhancing its robustness in complex environments. This data augmentation strategy aids the model in understanding and adapting to occluded conditions for keypoint prediction. By incorporating diverse occlusion patterns, the model can learn a broad range of scenarios, thus improving its generalization ability in practical applications. This method not only helps enhance the model’s performance in occluded scenes but also provides comprehensive training to boost its adaptability to complex situations. Feature-discrimination-based methods utilize attention mechanisms and similar techniques to reduce interference features. By strengthening features associated with the target person and suppressing those related to non-target individuals, these methods effectively mitigate the interference caused by feature confusion. These methods rely on mechanisms, such as attention, to selectively emphasize relevant features, thereby allowing the model to focus on distinguishing the keypoint features of the target person from those of interfering individuals. By enhancing the discriminative power of features belonging to the target individual, the model becomes adept at navigating scenarios where feature confusion is prevalent. Methods based on human body structure priors optimize occluded poses by leveraging prior knowledge of the human body structure. The use of human body structure priors is particularly effective in providing valuable information about the structural aspects of the human body. These priors serve as constraints that improve the robustness of the model during the inference process. 
By incorporating these priors, the model is further informed about the expected configuration of body parts, even in the presence of occlusion. This prior knowledge helps guide the model’s predictions and ensures that the estimated poses adhere closely to anatomically plausible configurations. A comparative analysis is also conducted to highlight the strengths and limitations of each method in handling occlusion. This paper also discusses the challenges inherent to occluded pose estimation and offers some directions for future research in this area.
Keywords: human pose estimation (HPE); occlusion; data augmentation; human structure a priori; insufficient occlusion labeling data
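
The data-augmentation category described in the abstract above can be illustrated with a minimal sketch. It assumes the simplest possible synthetic occluder, a filled rectangle pasted at a random position, and marks any keypoint it covers as not visible. The function name `add_random_occluder`, its parameters, and the gray fill value are hypothetical and chosen for illustration; they do not come from any method reviewed in the paper, and published methods typically paste more realistic occluders.

```python
import numpy as np

def add_random_occluder(image, keypoints, visible, rng, max_frac=0.4, fill=127):
    """Paste a filled rectangle and mark the keypoints it covers as occluded.

    image: (H, W, 3) uint8 array; keypoints: (K, 2) array of (x, y);
    visible: (K,) bool array. Inputs are not modified.
    Returns the augmented image, the updated visibility flags, and the
    occluder box as (top, left, height, width).
    """
    h, w = image.shape[:2]
    # Sample the occluder size (at least 1 pixel, at most max_frac of each side).
    occ_h = int(rng.integers(1, max(2, int(h * max_frac) + 1)))
    occ_w = int(rng.integers(1, max(2, int(w * max_frac) + 1)))
    # Sample the top-left corner so that the box stays inside the image.
    top = int(rng.integers(0, h - occ_h + 1))
    left = int(rng.integers(0, w - occ_w + 1))

    out = image.copy()
    out[top:top + occ_h, left:left + occ_w] = fill

    # A keypoint is treated as occluded if it falls inside the pasted box.
    x, y = keypoints[:, 0], keypoints[:, 1]
    covered = (x >= left) & (x < left + occ_w) & (y >= top) & (y < top + occ_h)
    return out, visible & ~covered, (top, left, occ_h, occ_w)
```

Applied on the fly during training, a transform like this exposes the model to occluded samples without any additional manual annotation, which is the motivation the abstract gives for this family of methods.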


Published: 2024-12-16

Abstract: Cross-view geo-localization aims to estimate a target geographical location by matching images from different viewpoints. This task is usually treated as an image retrieval problem, a formulation that has been widely adopted in various artificial intelligence tasks, such as person re-identification, vehicle re-identification, and image registration. The main challenge of this localization task lies in the drastic changes among different viewpoints, which reduce the retrieval performance of the model. Conventional techniques for cross-view geo-localization rely on manual feature extraction, which limits localization precision. With the development of deep learning, deep learning-based cross-view geo-localization methods have become the mainstream technology. However, because cross-view geo-localization involves multiple steps and extensive knowledge transfer, only a few studies have been conducted in this field. In this paper, we present the first review of cross-view geo-localization methods based on deep learning. We analyze developments in data preprocessing, deep learning networks, feature attention modules, and loss functions within the context of cross-view geo-localization tasks. To address the challenges in this field, the data preprocessing phase involves feature alignment, sampling strategies, and data augmentation. Feature alignment serves as prior knowledge for cross-view geo-localization and contributes to improving localization accuracy. The use of generative adversarial networks (GANs) has emerged as a prominent trend for feature alignment. Additionally, the discrepancy in sample quantities among satellite, ground, and drone images necessitates effective sampling strategies and data augmentation techniques to achieve training balance. Deep learning networks play a critical role in extracting image features, and their performance directly impacts the accuracy of cross-view geo-localization tasks. 
In general, methods that use a Transformer as the backbone network achieve higher accuracy than those based on ResNet, while methods that use the ConvNeXt network show the best performance. To further extract image features and enhance the discriminative power of the model, feature attention modules need to be designed. By learning effective attention mechanisms, these modules adaptively weight the input images or feature maps to improve the focus on task-relevant regions or features. Experimental results show that these modules can explore previously unattended feature information, further extract image features, and enhance the discriminative power of the model. Loss functions are used to improve the fit of the model to the data and to accelerate its convergence. Their values guide the training direction of the entire network, thus enabling the model to learn better representations and further improve the accuracy of cross-view geo-localization tasks. Some of the most commonly used loss functions include contrastive loss and triplet loss. As these loss functions have improved, the sample matching used by the model has evolved from one-to-one to one-to-many, thus allowing the model to cover all samples during training and further enhance its performance. By analyzing nearly a hundred influential publications, we summarize their characteristics and propose some ideas for improving cross-view geo-localization tasks, which can inspire researchers to design new methods. We also test 10 deep learning-based cross-view geo-localization methods on 2 representative datasets. This evaluation considers the backbone network type and input data size of these methods. On the University-1652 dataset, we evaluate the accuracy metrics R@1 and AP, the number of model parameters, and the inference speed. On the CVUSA dataset, we mainly evaluate four accuracy metrics, namely, R@1, R@5, R@10, and R@Top1. 
Experimental results show that a better backbone network and a larger input image size positively affect the performance of the model. Building upon an extensive review of the current state-of-the-art cross-view geo-localization methods, we also discuss the related challenges and provide several directions for further research on cross-view geo-localization.
Keywords: cross-view; geo-localization; image retrieval; deep learning; attention; drone
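
The triplet loss and the R@K retrieval metric named in the abstract above can be sketched as follows. This is a minimal NumPy illustration under stated assumptions: features are compared with Euclidean distance, the margin value 0.3 is arbitrary, and the function names `triplet_loss` and `recall_at_k` are hypothetical. It is not the implementation used by any of the evaluated methods.

```python
import numpy as np

def triplet_loss(anchor, positive, negative, margin=0.3):
    """Mean hinge loss max(0, d(a, p) - d(a, n) + margin) over a batch.

    Each argument is an (N, D) array of feature vectors; row i of
    `positive` shares a location with row i of `anchor`, row i of
    `negative` does not.
    """
    d_pos = np.linalg.norm(anchor - positive, axis=1)
    d_neg = np.linalg.norm(anchor - negative, axis=1)
    return float(np.maximum(0.0, d_pos - d_neg + margin).mean())

def recall_at_k(query_feats, gallery_feats, query_labels, gallery_labels, k):
    """Fraction of queries with a correct match among the k nearest gallery items."""
    # Pairwise Euclidean distances, shape (num_queries, num_gallery).
    diffs = query_feats[:, None, :] - gallery_feats[None, :, :]
    dists = np.linalg.norm(diffs, axis=2)
    # Indices of the k closest gallery items for every query.
    top_k = np.argsort(dists, axis=1)[:, :k]
    hits = (gallery_labels[top_k] == query_labels[:, None]).any(axis=1)
    return float(hits.mean())
```

In a cross-view setting, the queries would be, for example, drone-view or ground-view features and the gallery would hold satellite-view features, with labels identifying the location; R@1 then measures how often the nearest satellite image is the correct one.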


Published: 2024-12-16

Review

-

Abstract: Objective: Information warfare has placed higher requirements on military reconnaissance. Military target identification, one of the main tasks of military reconnaissance, must handle fine-grained military targets and provide personnel with more detailed target information. Optical remote sensing image datasets play a crucial role in remote sensing target detection tasks. These datasets provide valuable standard remote sensing data for model training as well as objective and uniform benchmarks for comparing different networks and algorithms. However, the current lack of high-quality fine-grained military target remote sensing image datasets constrains research on the automatic and accurate detection of military targets. As a special remote sensing target, military vehicles have certain characteristics, such as environmental camouflage, shape and structural changes, and movement “color shadows”, that make their detection particularly challenging. Fig. 1 shows the challenges posed by the fine-grained target characteristics of military vehicles in optical remote sensing images, which can be categorized into the following types according to the source of target characteristics: 1) target characterization as affected by satellite remote sensing imaging systems; 2) characterization of the vehicle target itself; 3) military vehicle target characterization; 4) characteristics affected by the combination; and 5) properties of fine-grained classification. To promote the development of deep-learning-based research on the fine-grained accurate detection of military vehicles in high-resolution remote sensing images, we construct a new high-resolution optical remote sensing image dataset called the military vehicle remote sensing dataset (MVRSD).
Using this dataset, we design an improved model based on YOLOv5s to improve the target detection performance for military vehicles. Method: We construct our dataset using Google Earth data, collecting 3 000 remotely sensed images from more than 40 military scenarios within Asia, North America, and Europe and acquiring 32 626 military vehicle targets from these images. These images have a spatial resolution of 0.3 m and a size of 640 × 640 pixels. Our dataset consists of remotely sensed images and the corresponding label files, and the targets were manually selected and classified by experts through the interpretation of high-resolution optical images. We divide the targets in the dataset into five fine-grained categories based on vehicle size and military function: small military vehicles (SMV), large military vehicles (LMV), armored fighting vehicles (AFV), military construction vehicles (MCV), and civilian vehicles (CV). The geographic environments of the samples include cities, plains, mountains, and deserts. To address the difficulty of recognizing military vehicles in remote sensing images, the proposed benchmark model takes into account the characteristics of military vehicles, namely, small targets and fuzzy shapes and appearances, along with interclass similarity and intraclass variability. The number of instances of each category in the dataset and the number of instances in each image depend on their actual distribution in the remote sensing scene, which reflects the realism and challenges of the dataset. We then design a cross-scale target-size-based detection head and a context aggregation module based on YOLOv5s to improve the detection performance for fine-grained military vehicle targets. Result: We analyze the characteristics of military vehicle targets in remote sensing images and the challenges faced in the fine-grained detection of these vehicles.
To address the poor detection accuracy of the YOLOv5 algorithm for small targets and reduce its risks of omission and misdetection, we design an improved model that uses YOLOv5s as the baseline and select a cross-scale detection head based on the dimensions of the targets in the dataset to efficiently detect targets at different scales. We insert an attention mechanism module in front of this detection head to suppress the interference of complex backgrounds on the target. We evaluate five target detection models on this dataset. Results of our experiments show that our proposed benchmark model improves the mean average precision by 1.1% compared with the classical target detection model. Moreover, the deep learning model achieves good performance in the fine-grained accurate detection of military vehicles. Conclusion: The MVRSD dataset can support researchers in analyzing the features of remote sensing images of military vehicles from different countries and provide training and test data for deep learning methods. The proposed benchmark model can also effectively improve the detection accuracy for remotely sensed military vehicles. The MVRSD dataset is available at https://github.com/baidongls/MVRSD.
Keywords: target detection; military vehicle dataset; high-resolution remote sensing; fine-grained; deep learning
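The dataset figures quoted in the abstract above imply some simple arithmetic that helps explain why the targets count as small. The sketch below uses only the stated numbers (0.3 m resolution, 640 × 640 pixels, 3 000 images, 32 626 targets). The 6 m vehicle length is a hypothetical value chosen for illustration and does not come from the source.

```python
# Figures taken from the abstract.
GSD_M = 0.3           # spatial resolution in metres per pixel
TILE_PX = 640         # image width and height in pixels
NUM_IMAGES = 3000
NUM_TARGETS = 32626


def tile_side_m(tile_px=TILE_PX, gsd_m=GSD_M):
    """Ground distance covered by one image side, in metres."""
    return tile_px * gsd_m


def mean_targets_per_image(num_targets=NUM_TARGETS, num_images=NUM_IMAGES):
    """Average number of labelled vehicles per image."""
    return num_targets / num_images


def length_in_pixels(length_m, gsd_m=GSD_M):
    """How many pixels an object of the given length spans along one axis."""
    return length_m / gsd_m


if __name__ == "__main__":
    print(f"tile side: {tile_side_m():.1f} m")
    print(f"targets per image: {mean_targets_per_image():.2f}")
    # Hypothetical 6 m long vehicle, for illustration only.
    print(f"6 m vehicle spans about {length_in_pixels(6.0):.0f} px")
```

Under these figures, each image covers roughly 192 m per side and holds about 10.9 targets on average, so a single vehicle occupies only a few tens of pixels along its length.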


Published: 2024-12-16

Dataset

-

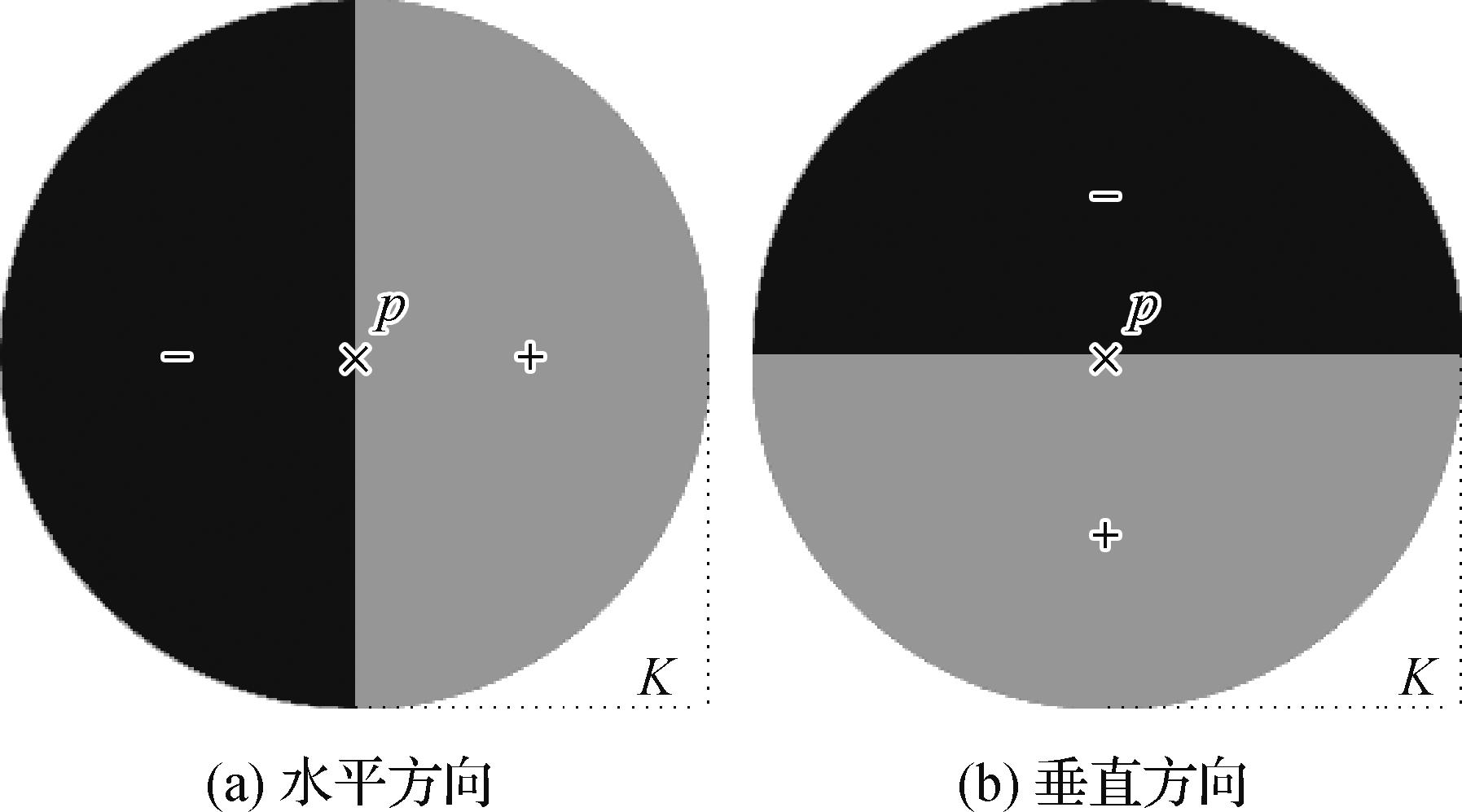

Abstract: Objective: Texture shows different characteristics at different scales. At a smaller scale, texture may appear more intricate and detailed, whereas at a larger scale, it may present large structures and patterns. Texture patterns are therefore complex and diverse, and their characteristics vary across patterns. For example, structural texture has a clear geometric shape and arrangement, natural texture has randomness and complexity, and abstract texture presents a combination of different colors, lines, and patterns. While the human visual system can effectively distinguish an ordered structure from a disordered one, computers are generally unable to do so. Texture filtering is a basic and important tool in computer vision and computer graphics whose main purpose is to filter out unnecessary texture details while maintaining the stability of the core structure. Mainstream texture filtering methods are mainly divided into local- and global-based methods. However, the existing texture filtering methods do not effectively guarantee structural stability while filtering the texture. To address this problem, we propose an image smoothing algorithm based on weighted relative total variation with adaptive regularization. Method: The main idea of this algorithm is to obtain a structure measure amplitude image with high texture-structure discrimination and then use the relative total variation model to smooth this image according to the difference between the texture and structure. Our method implements texture filtering and structure preservation in three steps. First, we propose a multi-scale interval circular gradient operator that can effectively distinguish texture from structure.
By feeding the intensity change information of the interval gradient in the horizontal and vertical directions (captured by the interval circular gradient operator) into the framework of directional anisotropic structure measurement (DASM), we generate a structure measure amplitude image with high contrast. In each iteration, we adjust the scale radius of the interval circular gradient operator so that it decreases as the number of iterations increases. On the one hand, this approach can capture the low-level semantic information of the texture structure over a large range at the initial stage of iteration and suppress the texture effectively. On the other hand, this approach can accurately capture the high-level semantic information of the texture structure at the end of the iteration to keep the structure stable. Second, given the high accuracy of the Gaussian mixture model in data classification, we separate the texture and structure layers of the structure measure amplitude image by using this model along with the EM algorithm. Before the separation operation, we apply a morphological erosion operation to the image to refine the structure edges and shrink the structure area so as to improve the accuracy of the separation result. Finally, we adaptively assign regularization weights according to the structure measure amplitude image and the texture-structure separation image. We assign a regularization term with a large weight to the texture region for texture suppression and a regularization term with a small weight to the structure region to maintain the stability of the fine structure, so that the texture is filtered out over a large area to the greatest extent while the integrity of the structure is maintained. Result: We ran our experiments on the Windows platform and implemented our algorithm using OpenCV and MATLAB.
We defined three main parameters, namely, the maximum scale radius of the multi-scale interval circular gradient operator, the regularization term of the texture region, and the regularization term of the structure region. The maximum scale radius controls how much texture is suppressed. A larger regularization term for the texture region corresponds to smoother filtering results, while a smaller regularization term for the structure region corresponds to a better structure retention ability. On the visual level, tests on images of oil paintings, cross embroideries, graffiti, murals, and natural scenes and comparisons with the existing mainstream texture filtering methods show that our proposed algorithm not only effectively suppresses strong-gradient texture but also maintains the stability of the edges of weak-gradient structures. In terms of quantitative measurement, when removing compression traces from JPEG images and smoothing images with Gaussian noise, our proposed algorithm obtains the highest peak signal-to-noise ratio (PSNR) and structural similarity index (SSIM) compared with the relative total variation, rolling guidance filtering, bilateral texture filtering, scale-aware texture filtering, and
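The peak signal-to-noise ratio used in the quantitative comparison above follows the standard definition PSNR = 10 · log10(peak² / MSE). The sketch below is a minimal NumPy version that assumes 8-bit images with a peak value of 255. It is not the authors' evaluation code, and the function name `psnr` is chosen here for illustration.

```python
import numpy as np


def psnr(reference, test, peak=255.0):
    """Peak signal-to-noise ratio in dB between two images of the same shape.

    Assumption: pixel values lie in [0, peak]; peak=255 corresponds to 8-bit images.
    """
    ref = np.asarray(reference, dtype=np.float64)
    tst = np.asarray(test, dtype=np.float64)
    if ref.shape != tst.shape:
        raise ValueError("images must have the same shape")
    mse = np.mean((ref - tst) ** 2)
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak ** 2 / mse))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    clean = rng.integers(0, 256, size=(64, 64)).astype(np.float64)
    # Add Gaussian noise and clip back to the valid 8-bit range.
    noisy = np.clip(clean + rng.normal(0.0, 10.0, size=clean.shape), 0, 255)
    print(f"PSNR of noisy image: {psnr(clean, noisy):.2f} dB")
```

A higher PSNR means the filtered result is closer to the reference image, which is why the comparison reports the maximum value as the best.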


Published: 2024-12-16

Abstract: Objective: In recent years, with the development of the internet and computer technology, manipulating images and changing their content have become trivial tasks. Therefore, robust image tampering detection methods need to be developed. From the perspective of passive forensics, image forgeries can be categorized into copy-move, splicing, and inpainting. Copy-move involves copying part of the original image to another part of the same image. Many excellent copy-move forgery detection (CMFD) methods have been developed in recent years and can be categorized into block-based, keypoint-based, and deep learning methods. However, these methods have the following drawbacks: 1) they cannot easily detect small or smooth tampered regions; 2) a massive number of features leads to a high computational cost; and 3) false alarm rates are high when the tampered images involve self-similar regions. To solve these issues, a novel CMFD method based on matched-pair density-based spatial clustering of applications with noise (MP-DBSCAN) and point density filtering is proposed in this paper. Method: First, a large number of scale-invariant feature transform (SIFT) keypoints are extracted from the input image by lowering the contrast threshold and normalizing the image scale, thus allowing the detection of a sufficient number of keypoints in small and smooth regions. Second, the generalized two nearest neighbor (G2NN) matching strategy is employed to handle multiple keypoint matching, thus allowing the detection algorithm to perform well even when the tampered region has been copied multiple times. A hierarchical matching strategy is then adopted to solve keypoint matching problems involving a massive number of keypoints. To accelerate the matching process, keypoints are first grouped by their grayscale values, and the G2NN matching strategy is then applied to each group instead of to all the keypoints detected in the entire image.
The efficiency and accuracy of the matching procedure can thus be improved without deleting correctly matched pairs. Third, an improved clustering algorithm called MP-DBSCAN is proposed. The matched pairs are grouped into different tampered regions, and the direction of the matched pairs is adjusted before the clustering process. The clustered objects represent only one side of the matched pairs rather than all the extracted keypoints, and the keypoints from the other side are used as constraints in the clustering process. A satisfactory detection result is obtained even when the tampered regions are close to one another. The proposed method obtains better F1 measures compared with the state-of-the-art copy-move forgery detection methods. Fourth, prior regions are constructed based on the clustering results. These prior regions can be regarded as the approximate tampered regions. A point density filtering policy is also proposed, where the point density of each region is calculated and the region with the lowest point density is deleted to reduce the false alarm rate. Finally, the tampered regions are located accurately using affine transforms and the zero-mean normalized cross-correlation (ZNCC) algorithm. Result: The proposed method is compared with the state-of-the-art CMFD methods on four standard datasets, namely, FAU, MICC-F600, GRIP, and CASIA v2.0. Provided by Christlein, the FAU dataset has an average resolution of about 3 000 × 2 300 pixels and includes tampered images under post-processing operations (e.g., additional noise and JPEG compression) and various geometrical attacks (e.g., scaling and rotation). This dataset involves 48, 480, 384, 432, and 240 plain copy-move, scaling, rotation, JPEG, and noise addition operations, respectively. The MICC-F600 dataset includes images in which a region is duplicated at least once. The resolutions of these images range from 800 × 533 to 3 888 × 2 592 pixels.
Among the 600 images in this dataset, 440 are original images and 160 are forged images. The GRIP dataset includes 80 original images and 80 tampered images with a low resolution of 1 024 × 768 pixels. Some tampered regions in these images are very smooth or small. The size of the tampered regions ranges from about 4 000 to 50 000 pixels. The CASIA v2.0 dataset contains 7 491 authentic and 5 123 forged images, of which 1 313 images are forged using copy-move methods. Precision, recall, and F1 scores are used as assessment criteria in the experiments. The pixel-level F1 scores of the proposed method on the FAU, MICC-F600, GRIP, and CASIA v2.0 datasets are 0.914 3, 0.890 6, 0.939 1, and 0.856 8, respectively. Extensive experimental results demonstrate the superior performance of the proposed method compared with the existing state-of-the-art methods. The effectiveness of the MP-DBSCAN algorithm and the point density filtering policy is also demonstrated via ablation studies. Conclusion: To detect tampered regions accurately, a novel CMFD method based on the MP-DBSCAN algorithm and the point density filtering policy is proposed in this paper. The matched pairs of an image can be divided into different tampered regions by using the MP-DBSCAN algorithm to detect these regions accurately. The mismatched pairs are then discarded by the point density filtering policy to reduce false alarm rates. Extensive experimental results demonstrate that the proposed method exhibits satisfactory accuracy and robustness compared with the existing state-of-the-art methods.
Keywords: multimedia forensics; image forensics; image forgery detection; copy-move forgery; density based spatial clustering of applications with noise (DBSCAN)
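The zero-mean normalized cross-correlation (ZNCC) used in the final localization step above can be sketched for a single pair of patches. This is the textbook definition, not the authors' implementation. How patches are sampled after the affine transform is not described in the abstract, so the example simply compares two equally sized arrays. The function name `zncc` and the `eps` guard are illustrative choices.

```python
import numpy as np


def zncc(patch_a, patch_b, eps=1e-12):
    """Zero-mean normalized cross-correlation of two equally sized patches.

    Returns a value in [-1, 1]; values near 1 indicate that the patches match
    up to a brightness offset and a positive contrast scaling.
    """
    a = np.asarray(patch_a, dtype=np.float64).ravel()
    b = np.asarray(patch_b, dtype=np.float64).ravel()
    if a.shape != b.shape:
        raise ValueError("patches must have the same number of pixels")
    a0 = a - a.mean()  # remove the mean intensity of each patch
    b0 = b - b.mean()
    denom = np.sqrt(np.sum(a0 ** 2) * np.sum(b0 ** 2))
    if denom < eps:
        return 0.0  # a flat patch has no structure to correlate
    return float(np.sum(a0 * b0) / denom)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    patch = rng.random((7, 7))
    brighter_copy = 2.0 * patch + 10.0  # same content, different gain and offset
    unrelated = rng.random((7, 7))
    print("copy     :", round(zncc(patch, brighter_copy), 4))
    print("unrelated:", round(zncc(patch, unrelated), 4))
```

Because the score is invariant to brightness offset and contrast gain, a copied region still correlates strongly with its source after mild intensity changes, which makes a ZNCC threshold a natural choice for pixel-level localization.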

Abstract:
Objective: In recent years, the development of the internet and computer technology has made manipulating images and changing their content trivial, so robust image tampering detection methods are needed. In passive forensics, image forgeries can be categorized into copy-move, splicing, and inpainting. Copy-move forgery copies part of an image to another part of the same image. Many excellent copy-move forgery detection (CMFD) methods have been developed in recent years and can be categorized into block-based, keypoint-based, and deep learning methods. However, these methods have the following drawbacks: 1) they cannot easily detect small or smooth tampered regions; 2) a massive number of features leads to a high computational cost; and 3) false alarm rates are high when the tampered images involve self-similar regions. To solve these issues, this paper proposes a novel CMFD method based on matched pairs density-based spatial clustering of applications with noise (MP-DBSCAN), together with point density filtering.
Method: First, a large number of scale-invariant feature transform (SIFT) keypoints are extracted from the input image by lowering the contrast threshold and normalizing the image scale, which yields a sufficient number of keypoints in small and smooth regions. Second, the generalized two nearest neighbor (G2NN) matching strategy is employed to handle multiple keypoint matching, so that detection still works when the tampered region has been copied multiple times. A hierarchical matching strategy is then adopted to cope with the massive number of keypoints. To accelerate matching, keypoints are first grouped by their grayscale values, and the G2NN matching strategy is then applied within each group instead of across all keypoints detected in the image. This improves the efficiency and accuracy of the matching procedure without deleting correct matched pairs. Third, an improved clustering algorithm called MP-DBSCAN is proposed. The matched pairs are grouped into different tampered regions, and the direction of the matched pairs is adjusted before clustering. The cluster objects represent only one side of the matched pairs rather than all extracted keypoints, and the keypoints on the other side are used as constraints during clustering. A satisfactory detection result is obtained even when the tampered regions are close to one another, and the proposed method obtains better F1 measures than state-of-the-art CMFD methods. Fourth, prior regions are constructed from the clustering results. These prior regions can be regarded as approximate tampered regions. A point density filtering policy is also proposed, in which the point density of each region is calculated and the region with the lowest point density is deleted to reduce the false alarm rate. Finally, the tampered regions are located accurately using affine transforms and the zero-mean normalized cross-correlation (ZNCC) algorithm.
Result: The proposed method is compared with state-of-the-art CMFD methods on four standard datasets, namely FAU, MICC-F600, GRIP, and CASIA v2.0. Provided by Christlein, the FAU dataset has an average resolution of about 3000 × 2300 pixels and includes tampered images under post-processing operations (e.g., additional noise and JPEG compression) and various geometrical attacks (e.g., scaling and rotation). It covers 48 plain copy-move, 480 scaling, 384 rotation, 432 JPEG compression, and 240 noise addition cases. The MICC-F600 dataset includes images in which a region is duplicated at least once, with resolutions ranging from 800 × 533 to 3888 × 2592 pixels. Among the 600 images in this dataset, 440 are original images and 160 are forged images. The GRIP dataset includes 80 original images and 80 tampered images with a low resolution of 1024 × 768 pixels. Some tampered regions in these images are very smooth or small, and their size ranges from about 4000 to 50000 pixels. The CASIA v2.0 dataset contains 7491 authentic and 5123 forged images, of which 1313 are forged using copy-move methods. Precision, recall, and F1 scores are used as assessment criteria in the experiments. The pixel-level F1 scores of the proposed method on the FAU, MICC-F600, GRIP, and CASIA v2.0 datasets are 0.9143, 0.8906, 0.9391, and 0.8568, respectively. Extensive experimental results demonstrate the superior performance of the proposed method compared with existing state-of-the-art methods. Ablation studies also demonstrate the effectiveness of the MP-DBSCAN algorithm and the point density filtering policy.
Conclusion: To detect tampered regions accurately, this paper proposes a novel CMFD method based on the MP-DBSCAN algorithm and the point density filtering policy. The MP-DBSCAN algorithm divides the matched pairs of an image into different tampered regions so that these regions can be detected accurately. The point density filtering policy then discards mismatched pairs to reduce false alarm rates. Extensive experimental results demonstrate that the proposed method achieves satisfactory accuracy and robustness compared with existing state-of-the-art methods.
Keywords: multimedia forensics; image forensics; image forgery detection; copy-move forgery; density-based spatial clustering of applications with noise (DBSCAN)
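The final localization step in the abstract relies on zero-mean normalized cross-correlation (ZNCC). As a minimal sketch of that similarity measure only, assuming two equal-size grayscale patches given as nested lists (the function name `zncc` and the handling of flat patches are illustrative choices, not taken from the paper):

```python
import math


def zncc(patch_a, patch_b):
    """Zero-mean normalized cross-correlation of two equal-size grayscale patches.

    Returns a value in [-1, 1]; values near 1 mean the patches match up to
    a brightness offset and a positive contrast gain.
    """
    a = [v for row in patch_a for v in row]
    b = [v for row in patch_b for v in row]
    if not a or len(a) != len(b):
        raise ValueError("patches must be non-empty and the same size")

    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    # Subtract the mean so that a constant brightness shift has no effect.
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]

    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0.0:
        # A flat patch has no variance, so the correlation is undefined.
        # Returning 0.0 here is an assumption of this sketch.
        return 0.0
    return sum(x * y for x, y in zip(da, db)) / denom


patch = [[10, 20], [30, 40]]
brighter = [[2 * v + 5 for v in row] for row in patch]  # gain and offset change
inverted = [[-v for v in row] for row in patch]         # contrast inversion
flat = [[7, 7], [7, 7]]                                 # no texture
```

Because the mean is removed and the result is normalized, `zncc(patch, brighter)` is 1 up to rounding, which is why the measure is commonly used to compare a region with its candidate copy after an estimated affine transform has aligned them.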

Published: 2024-12-16
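The entry above reports pixel-level precision, recall, and F1 scores. A minimal sketch of how such scores are typically computed from a predicted tamper mask and a ground-truth mask follows; the function name `pixel_scores`, the nested-list mask format, and the zero-division convention are assumptions for illustration, not the authors' evaluation code.

```python
def pixel_scores(pred_mask, true_mask):
    """Return (precision, recall, f1) for two binary masks of equal shape.

    A truthy entry marks a pixel as tampered.
    """
    tp = fp = fn = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            if p and t:
                tp += 1          # tampered pixel correctly detected
            elif p and not t:
                fp += 1          # false alarm
            elif t and not p:
                fn += 1          # missed tampered pixel

    # Convention assumed here: a score with an empty denominator is 0.0.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


pred = [[1, 1, 0],
        [0, 1, 0]]
true = [[1, 0, 0],
        [0, 1, 1]]
precision, recall, f1 = pixel_scores(pred, true)
```

In this toy case there are two true positives, one false alarm, and one missed pixel, so all three scores equal 2/3.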

Abstract:
Objective: Point clouds captured by depth sensors or generated by reconstruction algorithms are essential for various 3D vision tasks, including 3D scene understanding, scan registration, and 3D reconstruction. However, even a simple scene or object contains a massive number of unstructured points, which makes point cloud data challenging to store and transmit. Developing point cloud geometry compression algorithms is therefore important for handling and processing point cloud data effectively. Existing point cloud compression algorithms typically convert point clouds into a storage-efficient data structure, such as an octree representation or sparse points with latent features. These intermediate representations are then encoded as a compact bitstream by using either handcrafted or learning-based entropy coders. Although exploiting the correlation among spatial points effectively improves compression performance, existing algorithms may not fully exploit these points as representations of the object surface and topology. Recent studies have addressed this problem by exploring implicit representations and neural networks for surface reconstruction. However, these methods are primarily tailored for 3D objects represented as occupancy fields and signed distance fields, which limits their applicability to point clouds and non-watertight meshes in terms of surface representation and reconstruction. Furthermore, the neural networks used in these approaches often rely on simple multi-layer perceptron structures, which may lack capacity and compression efficiency for point cloud geometry compression tasks.
Method: To deal with these limitations, we propose a novel point cloud geometry compression framework that includes a signed logarithmic distance field, an implicit network structure with multiplicative branches, and an adaptive marching cubes algorithm for surface extraction. First, taking the point cloud surface as the zero level set, arbitrary points in space are mapped to the distances to their nearest points on the point cloud surface. We design an implicit representation called the signed logarithmic distance field (SLDF), which utilizes a thickness assumption and logarithmic parameterization to fit arbitrary point cloud surfaces. Afterward, we apply a multiplicative implicit neural encoding network (MINE) to encode the surface as a compact neural representation. MINE combines sinusoidal activation functions and multiplicative operators to enhance the capability and distribution characteristics of the network. The overfitting process transforms the mapping function from point cloud coordinates to implicit distance fields into a neural network, which is subsequently compressed using model compression. From the decompressed network, the continuous surface is reconstructed using the adaptive marching cubes (AMC) algorithm, which incorporates a dual-layer surface fusion process to further enhance the accuracy of surface extraction for SLDF.
Result: We compared our algorithm with six state-of-the-art algorithms, including surface compression approaches based on implicit representation and point cloud compression methods, on three public datasets, namely, ABC, Famous, and MPEG PCC. The quantitative evaluation metrics included the rate-distortion curves of chamfer-L1 distance (L1-CD), normal consistency (NC), and F-score for continuous point cloud surfaces, and the rate-distortion curve of D1-PSNR for quantized point cloud surfaces. Compared with the second-best method (i.e., INR), our proposed method reduces L1-CD loss by 12.4% and improves the NC and F-score performance by 1.5% and 13.6% on the ABC and Famous datasets, respectively. Moreover, the compression efficiency increases by an average of 12.9% as the number of model parameters grows. On multiple MPEG PCC datasets with samples taken from the 512-resolution MVUB dataset, 1024-resolution 8iVFB dataset, and 2048-resolution Owlii dataset, our method achieves a D1-PSNR performance of over 55 dB within the 10 KB range, which highlights its higher effective compression limit compared with G-PCC. Ablation experiments show that in the absence of SLDF, the L1-CD loss increases by 18.53%, while the D1-PSNR performance increases by 15 dB. Similarly, without the MINE network, the L1-CD loss increases by 3.72%, and the D1-PSNR performance increases by 2.67 dB.
Conclusion: This work explores the implicit representation of point cloud surfaces and proposes an enhanced point cloud compression framework. We first design SLDF to extend implicit representations to the arbitrary topologies found in point clouds, and then use the multiplicative branch network to enhance the capability and distribution characteristics of the network. Finally, we apply a surface extraction algorithm to enhance the quality of the reconstructed point cloud. In this way, we obtain a unified framework for the geometric compression of point cloud surfaces at arbitrary resolutions. Experimental results demonstrate that our proposed method achieves promising performance in point cloud geometry compression.
Keywords: point cloud geometry compression; implicit representation; surface reconstruction; model compression; surface extraction algorithm

Published: 2024-12-16
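One of the metrics reported for the point cloud compression entry above is the chamfer-L1 distance (L1-CD). The abstract does not spell out its exact definition, so the sketch below assumes one common convention: the average of the two directional mean nearest-neighbor distances, using unsquared Euclidean distance. The function names are illustrative, and the brute-force search is only suitable for small point sets.

```python
import math


def mean_nearest_distance(source, target):
    """Mean distance from each source point to its nearest target point."""
    return sum(min(math.dist(p, q) for q in target) for p in source) / len(source)


def chamfer_l1(points_a, points_b):
    """Symmetric chamfer distance with unsquared Euclidean distances.

    Assumed convention: the average of the A-to-B and B-to-A mean
    nearest-neighbor distances. Other papers sum the two terms instead.
    """
    if not points_a or not points_b:
        raise ValueError("both point sets must be non-empty")
    return 0.5 * (mean_nearest_distance(points_a, points_b)
                  + mean_nearest_distance(points_b, points_a))


reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
reconstructed = [(0.0, 0.0, 0.0), (1.0, 0.0, 1.0)]  # second point displaced by 1
distance = chamfer_l1(reference, reconstructed)
```

Here each direction has one exact match and one point at distance 1 from its nearest neighbor, so both directional means are 0.5 and `distance` is 0.5. For real point clouds, a spatial index such as a k-d tree would replace the quadratic nearest-neighbor search.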