最新刊期

卷 30 , 期 2 , 2025

- “在三维视觉领域,点云补全算法取得重要进展,专家系统综述了深度学习背景下的点云补全技术,为3D重建等研究提供重要参考。”

摘要:Point clouds have become the main form of 3D vision because of their rich information expression ability. However, the actual collected point cloud data are often sparse or incomplete due to the characteristics of the measured object, the performance of the measuring instrument, and environmental and human factors, which seriously affect the subsequent processing of the point cloud. The point cloud completion algorithm aims to reconstruct a complete point cloud model from incomplete point cloud data, which is an important research basis for 3D reconstruction, object detection, and shape classification. With the rapid development of deep learning methods, their efficient feature extraction ability and excellent data processing ability have led them to be widely used in 3D point cloud algorithms. At present, point cloud completion algorithms based on deep learning have gradually become a research hotspot in the field of 3D point clouds. However, challenges such as model structure, accuracy, and efficiency in completion tasks hinder the development of point cloud completion algorithms. Examples include the problems of missing key structural information, fine-grained reconstruction, and inefficiency of the algorithm model. This study systematically reviews point cloud completion algorithms in the context of deep learning. First, according to the network input modality, the point cloud completion algorithms are divided into two categories, namely, single-modality-based methods and multimodality-based methods. Then, according to the representation of 3D data, the methods based on a single modality are divided into three categories, namely, voxel-based methods, view-based methods, and point-based methods. The classical methods and the latest methods are systematically analyzed and summarized. The method characteristics and network performance of point cloud completion algorithms under various models were reviewed. Then, practical application analysis of the multimodal method is conducted, and the performance of the algorithm is compared with that of the diffusion model and other methods. Then, different datasets and evaluation criteria commonly used in point cloud completion tasks are summarized, the performance of existing point cloud completion algorithms based on deep learning on real datasets and synthetic datasets with a variety of evaluation criteria is compared, and the performance of existing point cloud completion algorithms is analyzed. Finally, according to the advantages and disadvantages of each classification, the future development and research trends of point cloud completion algorithms in the field of deep learning are proposed. The research results are as follows: since the concept of the point cloud completion algorithm was proposed in 2018, most methods based on a single mode use the point method for completion and combine hotspot models for algorithm optimization, such as generative adversarial networks (GANs), Transformer models, and the Mamba model. Multimodal methods have developed rapidly since they were proposed in 2021, especially since the diffusion model was applied to the point cloud completion algorithm, which truly realizes multimodal input and output. Many researchers have explored multimodal information fusion at the feature level to improve the model accuracy of the completion algorithm. This scheme also provides an updated algorithm theoretical basis for multivehicle cooperative intelligent perception technology in robotics and autonomous driving. Point cloud completion based on multimodal methods is also the development trend of point cloud completion algorithms in the future. Through a comprehensive survey and review of point cloud completion algorithms based on deep learning, the current research results have improved the ability of point cloud data feature extraction and model generation to a certain extent. However, the following research difficulties still exist: 1) features and fine-grained methods: at present, most algorithms are dedicated to making full use of structural information for prediction and generating fine-grained and more complete point cloud shapes. Multiple fusion operations of the geometric structure and attribute information based on the point cloud data structure must still be performed to enrich the high-quality generation of point cloud data. 2) Multimodal data fusion: point cloud data are usually fused with other sensor data to obtain more comprehensive information, such as RGB images and depth images. How to improve the method of multimodal feature extraction and fusion and explore the smart fusion of multimodal data to improve the accuracy and robustness of the point cloud completion algorithm will be the difficulties of future research. In the future, the development of point cloud completion algorithms will realize that all modes from text and image to point cloud will be completely opened, and any input and output will be realized in the real sense. 3) Data augmentation and diversity: large models of point clouds will be popular research topics in the future. Improving the generalization ability and data diversity of point cloud completion algorithms in various scenarios via data augmentation or model diffusion has also become difficult in the field of point clouds. 4) Real-time and interactivity: real-time requirements limit the development of point cloud completion algorithms in applications such as autonomous driving and robotics. The high complexity of the algorithm, the difficulty of multimodal feature information fusion, and the difficulty of large-scale data processing make the algorithm model inefficient, resulting in poor real-time performance. How to reduce the size of the data through data preprocessing, downsampling, and selecting a relatively lightweight model structure, such as the Mamba model, to improve model efficiency was investigated. Moreover, the rapid adjustment and optimization of high-quality point cloud completion results according to user interaction information will be difficult for future development. A systematic review of point cloud completion algorithms against the background of deep learning provides important reference value for researchers of completion algorithms in the field of 3D vision.关键词:point cloud completion;voxel-based method;multimodal-based method;Transformer model;diffusion model483|485|0更新时间:2025-04-11

摘要:Point clouds have become the main form of 3D vision because of their rich information expression ability. However, the actual collected point cloud data are often sparse or incomplete due to the characteristics of the measured object, the performance of the measuring instrument, and environmental and human factors, which seriously affect the subsequent processing of the point cloud. The point cloud completion algorithm aims to reconstruct a complete point cloud model from incomplete point cloud data, which is an important research basis for 3D reconstruction, object detection, and shape classification. With the rapid development of deep learning methods, their efficient feature extraction ability and excellent data processing ability have led them to be widely used in 3D point cloud algorithms. At present, point cloud completion algorithms based on deep learning have gradually become a research hotspot in the field of 3D point clouds. However, challenges such as model structure, accuracy, and efficiency in completion tasks hinder the development of point cloud completion algorithms. Examples include the problems of missing key structural information, fine-grained reconstruction, and inefficiency of the algorithm model. This study systematically reviews point cloud completion algorithms in the context of deep learning. First, according to the network input modality, the point cloud completion algorithms are divided into two categories, namely, single-modality-based methods and multimodality-based methods. Then, according to the representation of 3D data, the methods based on a single modality are divided into three categories, namely, voxel-based methods, view-based methods, and point-based methods. The classical methods and the latest methods are systematically analyzed and summarized. The method characteristics and network performance of point cloud completion algorithms under various models were reviewed. Then, practical application analysis of the multimodal method is conducted, and the performance of the algorithm is compared with that of the diffusion model and other methods. Then, different datasets and evaluation criteria commonly used in point cloud completion tasks are summarized, the performance of existing point cloud completion algorithms based on deep learning on real datasets and synthetic datasets with a variety of evaluation criteria is compared, and the performance of existing point cloud completion algorithms is analyzed. Finally, according to the advantages and disadvantages of each classification, the future development and research trends of point cloud completion algorithms in the field of deep learning are proposed. The research results are as follows: since the concept of the point cloud completion algorithm was proposed in 2018, most methods based on a single mode use the point method for completion and combine hotspot models for algorithm optimization, such as generative adversarial networks (GANs), Transformer models, and the Mamba model. Multimodal methods have developed rapidly since they were proposed in 2021, especially since the diffusion model was applied to the point cloud completion algorithm, which truly realizes multimodal input and output. Many researchers have explored multimodal information fusion at the feature level to improve the model accuracy of the completion algorithm. This scheme also provides an updated algorithm theoretical basis for multivehicle cooperative intelligent perception technology in robotics and autonomous driving. Point cloud completion based on multimodal methods is also the development trend of point cloud completion algorithms in the future. Through a comprehensive survey and review of point cloud completion algorithms based on deep learning, the current research results have improved the ability of point cloud data feature extraction and model generation to a certain extent. However, the following research difficulties still exist: 1) features and fine-grained methods: at present, most algorithms are dedicated to making full use of structural information for prediction and generating fine-grained and more complete point cloud shapes. Multiple fusion operations of the geometric structure and attribute information based on the point cloud data structure must still be performed to enrich the high-quality generation of point cloud data. 2) Multimodal data fusion: point cloud data are usually fused with other sensor data to obtain more comprehensive information, such as RGB images and depth images. How to improve the method of multimodal feature extraction and fusion and explore the smart fusion of multimodal data to improve the accuracy and robustness of the point cloud completion algorithm will be the difficulties of future research. In the future, the development of point cloud completion algorithms will realize that all modes from text and image to point cloud will be completely opened, and any input and output will be realized in the real sense. 3) Data augmentation and diversity: large models of point clouds will be popular research topics in the future. Improving the generalization ability and data diversity of point cloud completion algorithms in various scenarios via data augmentation or model diffusion has also become difficult in the field of point clouds. 4) Real-time and interactivity: real-time requirements limit the development of point cloud completion algorithms in applications such as autonomous driving and robotics. The high complexity of the algorithm, the difficulty of multimodal feature information fusion, and the difficulty of large-scale data processing make the algorithm model inefficient, resulting in poor real-time performance. How to reduce the size of the data through data preprocessing, downsampling, and selecting a relatively lightweight model structure, such as the Mamba model, to improve model efficiency was investigated. Moreover, the rapid adjustment and optimization of high-quality point cloud completion results according to user interaction information will be difficult for future development. A systematic review of point cloud completion algorithms against the background of deep learning provides important reference value for researchers of completion algorithms in the field of 3D vision.关键词:point cloud completion;voxel-based method;multimodal-based method;Transformer model;diffusion model483|485|0更新时间:2025-04-11 - “风格化数字人技术在计算机图形学等领域迅速发展,专家系统性综述了其风格化生成、多模态驱动与用户交互的研究进展,为后续研究提供参考。”

摘要:Stylized digital characters have emerged as a fundamental force in reshaping the landscape of computer graphics, visual arts, and game design. Their unparalleled ability to mimic human appearance and behavior, coupled with their flexibility in adapting to a wide array of artistic styles and narrative frameworks, underscores their growing importance in crafting immersive and engaging digital experiences. This comprehensive exploration delves deeply into the complex world of stylized digital humans, explores their current development status, identifies the latest trends, and addresses the pressing challenges that lie ahead in three foundational research domains: the creation of stylized digital humans, multimodal driving mechanisms, and user interaction modalities. The first domain, creation of stylized digital humans, examines the innovative methodologies employed in generating lifelike but stylistically diverse characters that can seamlessly integrate into various digital environments. From advancements in 3D modeling and texturing to the integration of artificial intelligence for dynamic character development, this section provides a thorough analysis of the tools and technologies that are pushing the boundaries of what digital characters can achieve. In the realm of multimodal driving mechanisms, this study investigates evolving techniques for animating and controlling digital humans by using a range of inputs, such as voice, gesture, and real-time motion capture. This section delves into how these mechanisms not only enhance the realism of character interactions but also open new avenues for creators to involve users in interactive narratives in more meaningful ways. Finally, the discussion of user interaction modalities explores the various ways in which end-users can engage with and influence the behavior of digital humans. From immersive virtual and augmented reality experiences to interactive web and mobile platforms, this segment evaluates the effectiveness of different modalities in creating a two-way interaction that enriches the user’s experience and deepens their connection to digital characters. At the heart of this exploration lies the creation of stylized digital humans, a field that has witnessed remarkable progress in recent years. The generation of these characters can be broadly classified into two categories: explicit 3D models and implicit 3D models. Explicit 3D digital human stylization encompasses a range of methodologies, including optimization-based approaches that meticulously refine digital meshes to conform to specific stylistic attributes. These techniques often involve iterative processes that adjust geometric details, textures, and lighting to achieve the desired aesthetic. Generative adversarial networks, as cornerstones of deep learning, have revolutionized this landscape by enabling the automatic generation of novel stylized forms that capture intricate nuances of various artistic styles. Furthermore, engine-based methods harness the power of advanced rendering engines to apply artistic filters and affect real time, offering unparalleled flexibility and control over the final visual output. Implicit 3D digital human stylization draws inspiration from the realm of implicit scene stylization, particularly via neural implicit representations. These approaches offer a more holistic and flexible approach for representing and manipulating 3D geometry and appearance, enabling stylization that transcends traditional mesh-based limitations. Within this framework, facial stylization holds a special place, requiring a profound understanding of facial anatomy, expression dynamics, and cultural nuances. Specialized methods have been developed to capture and manipulate facial features in a nuanced and artistic manner, fostering a level of realism and emotional expressiveness that is crucial for believable digital humans. Animating and controlling the behavior of stylized digital humans necessitates the use of diverse driving signals, which serve as the lifeblood of these virtual beings. This study delves into three primary sources of these signals: explicit audio drivers, text drivers, and video drivers. Audio drivers leverage speech recognition and prosody analysis to synchronize digital human movements with spoken language, enabling them to lip-sync and gesture in a natural and expressive manner. Conversely, text drivers rely on natural language processing (NLP) techniques to interpret textual commands or prompts and convert them into coherent actions, allowing for a more directive form of control. Video drivers, which are perhaps the most advanced in terms of realism, employ computer vision algorithms to track and mimic the movements of real-world actors, providing a seamless bridge between the virtual and physical worlds. These drivers are supported by sophisticated implementation algorithms, many of which rely on intermediate variable-driven coding-decoding structures. Keypoint-based methods play a pivotal role in capturing and transferring motion, allowing for the precise replication of movements across different characters. Moreover, 3D face-based approaches focus on facial animation and utilize detailed facial models and advanced animation techniques to achieve unparalleled realism in expressions and emotions. Meanwhile, optical flow-based techniques offer a holistic approach to motion estimation, synthesis, capture, and reproduction of complex motion patterns across the entire digital human body. The true magic of stylized digital humans lies in their ability to engage with users in meaningful and natural interactions. Voice interaction, currently the mainstream mode of communication, relies heavily on automatic speech recognition for accurate speech-to-text conversion and text-to-speech synthesis for generating natural-sounding synthetic speech. The dialog system module, a cornerstone of virtual human interaction, emphasizes the importance of natural language understanding for interpreting user inputs and natural language generation for crafting appropriate responses. When these capabilities are seamlessly integrated, stylized digital humans are capable of engaging in fluid and contextually relevant conversations with users, fostering a sense of intimacy and connection. The study of stylized digital characters will likely continue its ascendancy, fueled by advancements in deep learning, computer vision, and NLP. Future research may delve into integrating multiple modalities for richer and more nuanced interactions, pushing the boundaries of what is possible in virtual human communication. Innovative stylization techniques that bridge the gap between reality and fiction will also be explored, enabling the creation of digital humans that are both fantastic and relatable. Moreover, the development of intelligent agents capable of autonomous creativity and learning will revolutionize the way stylized digital humans can contribute to various industries, including entertainment, education, healthcare, and beyond. As technology continues to evolve, stylized digital humans will undoubtedly play an increasingly substantial role in shaping how people engage with digital content and with each other, ushering in a new era of digital creativity and expression. This study serves as a valuable resource for researchers and practitioners alike, offering a comprehensive overview of the current state of the art and guiding the way forward in this dynamic, exciting field.关键词:stylization;digital characters;face driven;human-computer interaction;3D modeling;deep learning;neural network297|339|0更新时间:2025-04-11

摘要:Stylized digital characters have emerged as a fundamental force in reshaping the landscape of computer graphics, visual arts, and game design. Their unparalleled ability to mimic human appearance and behavior, coupled with their flexibility in adapting to a wide array of artistic styles and narrative frameworks, underscores their growing importance in crafting immersive and engaging digital experiences. This comprehensive exploration delves deeply into the complex world of stylized digital humans, explores their current development status, identifies the latest trends, and addresses the pressing challenges that lie ahead in three foundational research domains: the creation of stylized digital humans, multimodal driving mechanisms, and user interaction modalities. The first domain, creation of stylized digital humans, examines the innovative methodologies employed in generating lifelike but stylistically diverse characters that can seamlessly integrate into various digital environments. From advancements in 3D modeling and texturing to the integration of artificial intelligence for dynamic character development, this section provides a thorough analysis of the tools and technologies that are pushing the boundaries of what digital characters can achieve. In the realm of multimodal driving mechanisms, this study investigates evolving techniques for animating and controlling digital humans by using a range of inputs, such as voice, gesture, and real-time motion capture. This section delves into how these mechanisms not only enhance the realism of character interactions but also open new avenues for creators to involve users in interactive narratives in more meaningful ways. Finally, the discussion of user interaction modalities explores the various ways in which end-users can engage with and influence the behavior of digital humans. From immersive virtual and augmented reality experiences to interactive web and mobile platforms, this segment evaluates the effectiveness of different modalities in creating a two-way interaction that enriches the user’s experience and deepens their connection to digital characters. At the heart of this exploration lies the creation of stylized digital humans, a field that has witnessed remarkable progress in recent years. The generation of these characters can be broadly classified into two categories: explicit 3D models and implicit 3D models. Explicit 3D digital human stylization encompasses a range of methodologies, including optimization-based approaches that meticulously refine digital meshes to conform to specific stylistic attributes. These techniques often involve iterative processes that adjust geometric details, textures, and lighting to achieve the desired aesthetic. Generative adversarial networks, as cornerstones of deep learning, have revolutionized this landscape by enabling the automatic generation of novel stylized forms that capture intricate nuances of various artistic styles. Furthermore, engine-based methods harness the power of advanced rendering engines to apply artistic filters and affect real time, offering unparalleled flexibility and control over the final visual output. Implicit 3D digital human stylization draws inspiration from the realm of implicit scene stylization, particularly via neural implicit representations. These approaches offer a more holistic and flexible approach for representing and manipulating 3D geometry and appearance, enabling stylization that transcends traditional mesh-based limitations. Within this framework, facial stylization holds a special place, requiring a profound understanding of facial anatomy, expression dynamics, and cultural nuances. Specialized methods have been developed to capture and manipulate facial features in a nuanced and artistic manner, fostering a level of realism and emotional expressiveness that is crucial for believable digital humans. Animating and controlling the behavior of stylized digital humans necessitates the use of diverse driving signals, which serve as the lifeblood of these virtual beings. This study delves into three primary sources of these signals: explicit audio drivers, text drivers, and video drivers. Audio drivers leverage speech recognition and prosody analysis to synchronize digital human movements with spoken language, enabling them to lip-sync and gesture in a natural and expressive manner. Conversely, text drivers rely on natural language processing (NLP) techniques to interpret textual commands or prompts and convert them into coherent actions, allowing for a more directive form of control. Video drivers, which are perhaps the most advanced in terms of realism, employ computer vision algorithms to track and mimic the movements of real-world actors, providing a seamless bridge between the virtual and physical worlds. These drivers are supported by sophisticated implementation algorithms, many of which rely on intermediate variable-driven coding-decoding structures. Keypoint-based methods play a pivotal role in capturing and transferring motion, allowing for the precise replication of movements across different characters. Moreover, 3D face-based approaches focus on facial animation and utilize detailed facial models and advanced animation techniques to achieve unparalleled realism in expressions and emotions. Meanwhile, optical flow-based techniques offer a holistic approach to motion estimation, synthesis, capture, and reproduction of complex motion patterns across the entire digital human body. The true magic of stylized digital humans lies in their ability to engage with users in meaningful and natural interactions. Voice interaction, currently the mainstream mode of communication, relies heavily on automatic speech recognition for accurate speech-to-text conversion and text-to-speech synthesis for generating natural-sounding synthetic speech. The dialog system module, a cornerstone of virtual human interaction, emphasizes the importance of natural language understanding for interpreting user inputs and natural language generation for crafting appropriate responses. When these capabilities are seamlessly integrated, stylized digital humans are capable of engaging in fluid and contextually relevant conversations with users, fostering a sense of intimacy and connection. The study of stylized digital characters will likely continue its ascendancy, fueled by advancements in deep learning, computer vision, and NLP. Future research may delve into integrating multiple modalities for richer and more nuanced interactions, pushing the boundaries of what is possible in virtual human communication. Innovative stylization techniques that bridge the gap between reality and fiction will also be explored, enabling the creation of digital humans that are both fantastic and relatable. Moreover, the development of intelligent agents capable of autonomous creativity and learning will revolutionize the way stylized digital humans can contribute to various industries, including entertainment, education, healthcare, and beyond. As technology continues to evolve, stylized digital humans will undoubtedly play an increasingly substantial role in shaping how people engage with digital content and with each other, ushering in a new era of digital creativity and expression. This study serves as a valuable resource for researchers and practitioners alike, offering a comprehensive overview of the current state of the art and guiding the way forward in this dynamic, exciting field.关键词:stylization;digital characters;face driven;human-computer interaction;3D modeling;deep learning;neural network297|339|0更新时间:2025-04-11

Review

- “在高空无人机多模态目标跟踪领域,专家构建了HiAl数据集,旨在推动相关技术研究。”

摘要:ObjectiveUnmanned aerial vehicles (UAVs) have become crucial tools in both modern military and civilian contexts owing to their flexibility and ease of operation. High-altitude UAVs provide unique and distinct advantages over low-altitude UAVs, such as wider fields of view and stronger concealment, making them highly valuable in intelligence reconnaissance, emergency rescue, and disaster relief tasks. However, tracking objects with high-altitude UAVs introduces considerable challenges, including UAV rotation, tiny objects, complex background changes, and low object resolution. The current research on multi-modal object tracking of UAVs focuses primarily on low-altitude UAVs, such as the dataset named VTUAV (visible-thermal UAV) for multi-modal object tracking of UAVs, which is shot in low-altitude airspace of 5–20 m and can fully show the unique perspective of UAVs. However, the scenes captured by high-altitude UAVs significantly differ from those captured by low-altitude UAVs. Thus, this dataset cannot provide strong support for the development of high-altitude UAV multi-modal object tracking, which is also the bottleneck of the lack of data support in the research field of multi-modal object tracking of high-altitude UAVs. Given the lack of an evaluation dataset to evaluate the multi-modal object tracking method of high-altitude UAVs, this limitation hinders research and development in this field.MethodThis study proposes an evaluation dataset named HiAl specifically for multi-modal object tracking methods of high-altitude UAVs captured at approximately 500 m. The UAV shooting this dataset is equipped with a hybrid sensor, which can capture video in both visible and infrared modes. The collected multimodal videos with high-quality videos were registered to provide a higher level of ground truth annotation and evaluate different multi-modal object tracking methods more fairly. First, the two video modalities were manually aligned to ensure that the same tracking object in each pair of videos occupied the same position within the frame. During the registration process, ensuring accurate registration of the area where the tracking object is located is the top priority, and under this premise, other areas in the image also become roughly aligned. Then, accurate ground truth annotations are provided to each frame of the video on the basis of the high alignment of the two modalities. The horizontal annotation boxes were used to label the position of the target in a way that best fits the contour of the tracked object. In the abovementioned modal alignment, two video modalities can share the same ground truth, which allows better evaluation of different multi-modal object tracking methods under the high-altitude UAV platform. Tracking attributes, scenes, and object categories was comprehensively considered during the data collection process to ensure the diversity and authenticity of the dataset. The dataset considers different lighting conditions and weather factors, including night and foggy days, for nine common object categories in high-altitude UAV scenes. The dataset has 12 tracking attributes; two are unique to UAVs, which have rich practical significance and high challenges. In contrast to existing multimodal tracking datasets, this dataset tracks mostly small targets, which is also a realistic challenge associated with high-altitude UAV shooting.ResultThe performances of 10 mainstream multi-modal tracking methods on this dataset are compared with those on a nonhigh-altitude UAV scene dataset. This study employs common quantitative evaluation metrics, namely, the precision rate (PR) and success rate (SR), to assess the performance of each method. Taking the two outstanding methods as examples, the PR value of the template-bridged search region interaction (TBSI) method on the RGB-thermal dataset (RGBT234) reached 0.871, whereas it was only 0.527 on the dataset proposed in this study, which decreased by 39.5%; its SR value decreased from 0.637 on RGBT234 to 0.468 on the dataset proposed in this study, which decreased by 26.5%. Compared with those of RGBT234, the PR and SR of the hierarchical multi-modal fusion tracker (HMFT) on the HiAl dataset decreased by 23.6% and 14%, respectively. In addition, the dataset HiAlto was used to retrain six methods. Comparative results indicated improved performance of all the retraining methods. For example, the PR value of the duality-gated mutual condition network (DMCNet) is increased from 0.485 before training to 0.524, and the SR value increased from 0.512 before training to 0.526. These experimental results reflect the high challenge and necessity of the dataset.ConclusionAn evaluation dataset designed to assess the performance of multi-modal object tracking methods for high-altitude UAVs is introduced in this study. The multimodal data collected in the real scene and provided frame-level ground truth annotations were carefully registered to provide a dedicated dataset for high-quality multi-modal tracking of high-altitude UAVs. This proposed dataset HiAl can serve as a’standard evaluation tool for future research, offering researchers access to authentic and varied data to evaluate their algorithms performance. The experimental results of 10 mainstream tracking algorithms in HiAl with other datasets were compared, and the experimental results of retraining 6 tracking algorithms, including the limitations of existing algorithms in the multi-modal object tracking task of high-altitude UAVs, were analyzed. The potential research directions were extracted for researchers’ reference. The HiAl dataset is available at https://github.com/mmic-lcl/Datasets-and-benchmark-code/tree/main.关键词:multi-modal object tracking;high altitude unmanned aerial vehicle;small object;high quality alignment;dataset660|316|0更新时间:2025-04-11

摘要:ObjectiveUnmanned aerial vehicles (UAVs) have become crucial tools in both modern military and civilian contexts owing to their flexibility and ease of operation. High-altitude UAVs provide unique and distinct advantages over low-altitude UAVs, such as wider fields of view and stronger concealment, making them highly valuable in intelligence reconnaissance, emergency rescue, and disaster relief tasks. However, tracking objects with high-altitude UAVs introduces considerable challenges, including UAV rotation, tiny objects, complex background changes, and low object resolution. The current research on multi-modal object tracking of UAVs focuses primarily on low-altitude UAVs, such as the dataset named VTUAV (visible-thermal UAV) for multi-modal object tracking of UAVs, which is shot in low-altitude airspace of 5–20 m and can fully show the unique perspective of UAVs. However, the scenes captured by high-altitude UAVs significantly differ from those captured by low-altitude UAVs. Thus, this dataset cannot provide strong support for the development of high-altitude UAV multi-modal object tracking, which is also the bottleneck of the lack of data support in the research field of multi-modal object tracking of high-altitude UAVs. Given the lack of an evaluation dataset to evaluate the multi-modal object tracking method of high-altitude UAVs, this limitation hinders research and development in this field.MethodThis study proposes an evaluation dataset named HiAl specifically for multi-modal object tracking methods of high-altitude UAVs captured at approximately 500 m. The UAV shooting this dataset is equipped with a hybrid sensor, which can capture video in both visible and infrared modes. The collected multimodal videos with high-quality videos were registered to provide a higher level of ground truth annotation and evaluate different multi-modal object tracking methods more fairly. First, the two video modalities were manually aligned to ensure that the same tracking object in each pair of videos occupied the same position within the frame. During the registration process, ensuring accurate registration of the area where the tracking object is located is the top priority, and under this premise, other areas in the image also become roughly aligned. Then, accurate ground truth annotations are provided to each frame of the video on the basis of the high alignment of the two modalities. The horizontal annotation boxes were used to label the position of the target in a way that best fits the contour of the tracked object. In the abovementioned modal alignment, two video modalities can share the same ground truth, which allows better evaluation of different multi-modal object tracking methods under the high-altitude UAV platform. Tracking attributes, scenes, and object categories was comprehensively considered during the data collection process to ensure the diversity and authenticity of the dataset. The dataset considers different lighting conditions and weather factors, including night and foggy days, for nine common object categories in high-altitude UAV scenes. The dataset has 12 tracking attributes; two are unique to UAVs, which have rich practical significance and high challenges. In contrast to existing multimodal tracking datasets, this dataset tracks mostly small targets, which is also a realistic challenge associated with high-altitude UAV shooting.ResultThe performances of 10 mainstream multi-modal tracking methods on this dataset are compared with those on a nonhigh-altitude UAV scene dataset. This study employs common quantitative evaluation metrics, namely, the precision rate (PR) and success rate (SR), to assess the performance of each method. Taking the two outstanding methods as examples, the PR value of the template-bridged search region interaction (TBSI) method on the RGB-thermal dataset (RGBT234) reached 0.871, whereas it was only 0.527 on the dataset proposed in this study, which decreased by 39.5%; its SR value decreased from 0.637 on RGBT234 to 0.468 on the dataset proposed in this study, which decreased by 26.5%. Compared with those of RGBT234, the PR and SR of the hierarchical multi-modal fusion tracker (HMFT) on the HiAl dataset decreased by 23.6% and 14%, respectively. In addition, the dataset HiAlto was used to retrain six methods. Comparative results indicated improved performance of all the retraining methods. For example, the PR value of the duality-gated mutual condition network (DMCNet) is increased from 0.485 before training to 0.524, and the SR value increased from 0.512 before training to 0.526. These experimental results reflect the high challenge and necessity of the dataset.ConclusionAn evaluation dataset designed to assess the performance of multi-modal object tracking methods for high-altitude UAVs is introduced in this study. The multimodal data collected in the real scene and provided frame-level ground truth annotations were carefully registered to provide a dedicated dataset for high-quality multi-modal tracking of high-altitude UAVs. This proposed dataset HiAl can serve as a’standard evaluation tool for future research, offering researchers access to authentic and varied data to evaluate their algorithms performance. The experimental results of 10 mainstream tracking algorithms in HiAl with other datasets were compared, and the experimental results of retraining 6 tracking algorithms, including the limitations of existing algorithms in the multi-modal object tracking task of high-altitude UAVs, were analyzed. The potential research directions were extracted for researchers’ reference. The HiAl dataset is available at https://github.com/mmic-lcl/Datasets-and-benchmark-code/tree/main.关键词:multi-modal object tracking;high altitude unmanned aerial vehicle;small object;high quality alignment;dataset660|316|0更新时间:2025-04-11 - “在人物图像生成领域,专家构建了大规模高清人物图像数据集PersonHD,为高分辨率姿态引导图像生成提供新平台。”

摘要:ObjectivePose-guided person image generation has attracted considerable attention because of its wide application potential. In the early stages of development, researchers relied mainly on manually designing features and models, matching key points between different characters, and then achieving pose transfer via interpolation or transformation. With the rapid development of deep learning technology, the emergence of generative adversarial networks (GANs) has led to considerable progress in posture transfer. GANs can learn and generate realistic images, and variants of related generative adversarial networks have been widely used in pose transfer tasks. Moreover, deep learning has made progress in key point detection technology. Advanced key point detection models, such as OpenPose, can more accurately capture human pose information, providing tremendous assistance for the development of algorithms in related fields and the construction of datasets. Recent works have achieved great success in pose-guided person image generation tasks with low-definition scenes. However, in high-resolution scenes, existing human pose transfer datasets suffer from low resolution or poor diversity, and relevant high-resolution image generation methods are lacking. This issue is addressed by constructing a large-scale high-definition human image dataset named PersonHD with multimodal auxiliary data.MethodThis study constructs a large-scale, high-resolution human image dataset called PersonHD. Compared with other datasets, this dataset has several advantages. 1) Higher image resolution: the cropped human images in PersonHD have a resolution of 1 520 × 880 pixels. 2) More diverse pose variations: the actions of the subjects are closer to real-life scenarios, introducing more fine-grained nonrigid deformation of the human body. 3) Larger image size. The PersonHD dataset contains 299 817 images from 100 different people in 4 000 videos. On the basis of the proposed PersonHD, this study further constructs two benchmarks and designs a practical high-resolution human image generation framework. Given that most existing works address human images with a resolution of 256 × 256 pixels, this study first establishes a low-resolution (256 × 256 pixels) benchmark for general evaluation, evaluates the performance of these methods on the PersonHD dataset, and further improves the performance of the state-of-the-art methods. This study also constructs a high-definition benchmark (512 × 512 pixels) to verify the performance of the state-of-the-art methods on the PersonHD dataset. These two benchmarks also enable this study to rigorously evaluate the performance of existing and future human pose transfer methods. In addition, this study proposes a practical framework to generate higher-resolution and higher-quality human images. In particular, this study first designs semantically enhanced partwise augmentation to solve the challenging overfitting problem in human image generation. A conditional upsampling module is then introduced for the generation and further refinement of high-resolution images.ResultCompared with existing datasets, PersonHD has significant advantages in terms of higher image resolution, more diverse pose variance, and larger sample sizes. On the PersonHD dataset, experiments systematically evaluate the current representative pose-guided character image generation methods on two different resolution evaluation benchmarks and systematically validate the effectiveness of each module of the framework proposed in this study. The experiment used five indicators to quantitatively analyze the performance of the model, including the structure similarity index measure(SSIM), Frechet inception distance(FID), learned perceptual image patch similarity(LPIPS), mask LPIPS, and percentage of correct keypoints with head-normalization(PCKh). For low-resolution benchmarks, most current methods are designed for low-resolution images of size 256 × 256 pixels. The image size was adjusted to 256 × 256 pixels during the experiment to evaluate these methods on the PersonHD dataset. Moreover, PersonHD was split into two subsets, namely, a clean subset and a complex subset, to evaluate the processing ability of different models for different backgrounds. This method compares several of the latest methods on two subsets of PersonHD, including pose-attentional transfer network(PATN), Must-GAN, Xing-GAN, pose-guided image synthesis and editing(PISE), and semantic part-based generation network(SPGNet). During the experiment, semantically enhanced partwise augmentation and one-shot-driven personalization were used to improve the performance of SPGNet as the baseline. The semantically enhanced partwise augmentation and one-shot driven personalization proposed in this research improve the performance of SPGNet in terms of multiple indicators, and the relevant modules of the framework significantly improve the model’s performance. For high-resolution benchmarks, given the limited work on pose-guided character image generation at high resolution, this study uses a conditional upsampling module to design the most advanced SPGNet model and further improves performance by using semantically enhanced partwise augmentation methods and one-shot-driven personalization. The experimental results indicate that the framework has good performance.ConclusionA large-scale high-resolution person image dataset called PersonHD, which contains 299 817 high-quality images, was developed. Compared with existing datasets, PersonHD has significant superiority in terms of higher image resolution, more diverse pose-variance, and larger scale samples. The high-definition character image generation benchmark dataset proposed in this article has the characteristics of a large scale and strong diversity of high-resolution data, which helps to comprehensively evaluate pose-guided character image generation algorithms. Comprehensive benchmarks were established, and extensive experimental evaluations were implemented on the basis of general low-definition protocols and the first proposed high-definition protocols, which could contribute to an important platform for analyzing recent state-of-the-art person image generation methods. A unified framework for high-definition person image generation, including semantically enhanced partwise augmentation and a conditional upsampling module, was also used. Both modules are flexible and can work separately in a plug-and-play manner. The dataset and code proposed in this work are available at https://github.com/BraveGroup/PersonHD.关键词:human image synthesis;pose-guided transfer;high-definition dataset;low-definition benchmark;high definition benchmark96|127|0更新时间:2025-04-11

摘要:ObjectivePose-guided person image generation has attracted considerable attention because of its wide application potential. In the early stages of development, researchers relied mainly on manually designing features and models, matching key points between different characters, and then achieving pose transfer via interpolation or transformation. With the rapid development of deep learning technology, the emergence of generative adversarial networks (GANs) has led to considerable progress in posture transfer. GANs can learn and generate realistic images, and variants of related generative adversarial networks have been widely used in pose transfer tasks. Moreover, deep learning has made progress in key point detection technology. Advanced key point detection models, such as OpenPose, can more accurately capture human pose information, providing tremendous assistance for the development of algorithms in related fields and the construction of datasets. Recent works have achieved great success in pose-guided person image generation tasks with low-definition scenes. However, in high-resolution scenes, existing human pose transfer datasets suffer from low resolution or poor diversity, and relevant high-resolution image generation methods are lacking. This issue is addressed by constructing a large-scale high-definition human image dataset named PersonHD with multimodal auxiliary data.MethodThis study constructs a large-scale, high-resolution human image dataset called PersonHD. Compared with other datasets, this dataset has several advantages. 1) Higher image resolution: the cropped human images in PersonHD have a resolution of 1 520 × 880 pixels. 2) More diverse pose variations: the actions of the subjects are closer to real-life scenarios, introducing more fine-grained nonrigid deformation of the human body. 3) Larger image size. The PersonHD dataset contains 299 817 images from 100 different people in 4 000 videos. On the basis of the proposed PersonHD, this study further constructs two benchmarks and designs a practical high-resolution human image generation framework. Given that most existing works address human images with a resolution of 256 × 256 pixels, this study first establishes a low-resolution (256 × 256 pixels) benchmark for general evaluation, evaluates the performance of these methods on the PersonHD dataset, and further improves the performance of the state-of-the-art methods. This study also constructs a high-definition benchmark (512 × 512 pixels) to verify the performance of the state-of-the-art methods on the PersonHD dataset. These two benchmarks also enable this study to rigorously evaluate the performance of existing and future human pose transfer methods. In addition, this study proposes a practical framework to generate higher-resolution and higher-quality human images. In particular, this study first designs semantically enhanced partwise augmentation to solve the challenging overfitting problem in human image generation. A conditional upsampling module is then introduced for the generation and further refinement of high-resolution images.ResultCompared with existing datasets, PersonHD has significant advantages in terms of higher image resolution, more diverse pose variance, and larger sample sizes. On the PersonHD dataset, experiments systematically evaluate the current representative pose-guided character image generation methods on two different resolution evaluation benchmarks and systematically validate the effectiveness of each module of the framework proposed in this study. The experiment used five indicators to quantitatively analyze the performance of the model, including the structure similarity index measure(SSIM), Frechet inception distance(FID), learned perceptual image patch similarity(LPIPS), mask LPIPS, and percentage of correct keypoints with head-normalization(PCKh). For low-resolution benchmarks, most current methods are designed for low-resolution images of size 256 × 256 pixels. The image size was adjusted to 256 × 256 pixels during the experiment to evaluate these methods on the PersonHD dataset. Moreover, PersonHD was split into two subsets, namely, a clean subset and a complex subset, to evaluate the processing ability of different models for different backgrounds. This method compares several of the latest methods on two subsets of PersonHD, including pose-attentional transfer network(PATN), Must-GAN, Xing-GAN, pose-guided image synthesis and editing(PISE), and semantic part-based generation network(SPGNet). During the experiment, semantically enhanced partwise augmentation and one-shot-driven personalization were used to improve the performance of SPGNet as the baseline. The semantically enhanced partwise augmentation and one-shot driven personalization proposed in this research improve the performance of SPGNet in terms of multiple indicators, and the relevant modules of the framework significantly improve the model’s performance. For high-resolution benchmarks, given the limited work on pose-guided character image generation at high resolution, this study uses a conditional upsampling module to design the most advanced SPGNet model and further improves performance by using semantically enhanced partwise augmentation methods and one-shot-driven personalization. The experimental results indicate that the framework has good performance.ConclusionA large-scale high-resolution person image dataset called PersonHD, which contains 299 817 high-quality images, was developed. Compared with existing datasets, PersonHD has significant superiority in terms of higher image resolution, more diverse pose-variance, and larger scale samples. The high-definition character image generation benchmark dataset proposed in this article has the characteristics of a large scale and strong diversity of high-resolution data, which helps to comprehensively evaluate pose-guided character image generation algorithms. Comprehensive benchmarks were established, and extensive experimental evaluations were implemented on the basis of general low-definition protocols and the first proposed high-definition protocols, which could contribute to an important platform for analyzing recent state-of-the-art person image generation methods. A unified framework for high-definition person image generation, including semantically enhanced partwise augmentation and a conditional upsampling module, was also used. Both modules are flexible and can work separately in a plug-and-play manner. The dataset and code proposed in this work are available at https://github.com/BraveGroup/PersonHD.关键词:human image synthesis;pose-guided transfer;high-definition dataset;low-definition benchmark;high definition benchmark96|127|0更新时间:2025-04-11

Dataset

- “在计算摄影领域,专家提出了高斯—维纳表示下的稠密焦栈图生成方法,有效解决了焦栈图像稠密化难题,为景深导向的视觉应用提供关键技术。”

摘要:ObjectiveIn optical imaging systems, the depth of field (DoF) is typically limited by the properties of optical lenses, resulting in the ability to focus only on a limited region of the scene. Thus, expanding the depth of field for optical systems is a challenging task in the community for both academia and industry. For example, in computational photography, when dense focus stack images are captured, photographers can select different focal points and depths of field in postprocessing to achieve the desired artistic effects. In macro- and micro-imaging, dense focus stack images can provide clearer and more detailed images for more accurate analysis and measurement. For interactive and immersive media, dense focus stack images can provide a more realistic and immersive visual experience. However, achieving dense focus stack images also faces some challenges. First, the performance of hardware devices limits the speed and quality of image acquisition. During the shooting process, the camera needs to adjust the focus quickly and accurately and capture multiple images to build a focus stack. Therefore, high-performance cameras and adaptive autofocus algorithms are required. In addition, changes in the shooting environment, such as object motion or manual operations by photographers, can also introduce image blurring and alignment issues. These challenges are addressed by introducing the block-based Gaussian-Wiener bidirectional prediction model to provide an effective solution. When the image is embedded into blocks and the characteristics of local blocks for prediction are utilized, the computational complexity can be reduced, and the prediction accuracy can be improved. Gaussian-Wiener filtering can smooth prediction results and reduce the impact of artifacts and noise, which can improve image quality. The bidirectional prediction method combines the original sparse focal stack images(FoSIs) with the prediction results to generate dense FoSIs, thereby expanding the DoF of the optical system. The Gaussian-Wiener bidirectional prediction model provides an innovative method for capturing dense focus stack images. It can be applied to various scenarios and application fields, providing greater creative freedom and image processing capabilities for photographers, scientists, engineers, and artists.MethodThis work abstracts the FoS as a Gaussian-Wiener representation. The proposed bidirectional prediction model includes a bidirectional fitting module and a prediction generation module. On the basis of the Gaussian-Wiener representation model, a bidirectional fitting model is constructed to solve for the bidirectional prediction parameters and draw a new focal stack image. First, on the basis of the given sparse focus stack image sequence, the number ranges from near to far according to the focal length. These numbers start from 0 and are incremented according to a certain rule, such as increasing by 2 each time to ensure that all the numbers are even. These results in a set of sparse focus stack images arranged in serial order. Then, all images were divided into blocks according to predefined block sizes. The size of each block can be selected on the basis of specific needs and algorithms. The blocks located in adjacent numbers are combined to form a block pair, which becomes the most basic unit for bidirectional prediction. Before conducting bidirectional prediction, the image was preprocessed by dividing the focus stack image into blocks and recombining them into block pairs. This preprocessing process can be achieved by using image segmentation algorithms and block pair combination strategies. For each block pair, bidirectional prediction was performed to obtain the prediction parameters. These prediction parameters can be determined on the basis of specific prediction models and algorithms, such as the block-based Gaussian-Wiener bidirectional prediction model. In the bidirectional prediction module, block pairs can be used to fit the best bidirectional fitting parameters, and on this basis, the prediction generation parameters can be solved. When the information of the prediction generation parameters and block pairs is applied, new prediction blocks can be generated. Finally, when all the prediction blocks are concatenated according to their positions in the image, new prediction focus stack images can be obtained.ResultThis experiment is performed on 11 sparse focal stack images, with evaluation metrics using the peak signal-to-noise ratio (PSNR) and structural similarity index measure (SSIM). The average PSNR of the 11 sequence generated results is 40.861 dB, and the average SSIM is 0.976. Compared with the two comparison methods of generalized Gaussian and spatial coordinates, the PSNR is improved by 6.503 dB and 6.467 dB, respectively, and the SSIM is improved by 0.057 and 0.092, respectively. The average PSNR and SSIM of each sequence improved by at least 3.474 dB and 0.012, respectively.ConclusionThe experimental results show that the method proposed in this study outperforms both the subjective and objective comparison methods and has good performance on 11 different scene sequences. Combined with ablation experiments, the advantages of bidirectional prediction in the proposed method have been demonstrated. The results indicate that the proposed bidirectional prediction method can effectively generate new focal pile images and play a crucial role in visual applications that target various depths of the field.关键词:focal stack image(FoSI);prediction model;Gaussian-Wiener;representation model;bidirectional prediction111|113|0更新时间:2025-04-11

摘要:ObjectiveIn optical imaging systems, the depth of field (DoF) is typically limited by the properties of optical lenses, resulting in the ability to focus only on a limited region of the scene. Thus, expanding the depth of field for optical systems is a challenging task in the community for both academia and industry. For example, in computational photography, when dense focus stack images are captured, photographers can select different focal points and depths of field in postprocessing to achieve the desired artistic effects. In macro- and micro-imaging, dense focus stack images can provide clearer and more detailed images for more accurate analysis and measurement. For interactive and immersive media, dense focus stack images can provide a more realistic and immersive visual experience. However, achieving dense focus stack images also faces some challenges. First, the performance of hardware devices limits the speed and quality of image acquisition. During the shooting process, the camera needs to adjust the focus quickly and accurately and capture multiple images to build a focus stack. Therefore, high-performance cameras and adaptive autofocus algorithms are required. In addition, changes in the shooting environment, such as object motion or manual operations by photographers, can also introduce image blurring and alignment issues. These challenges are addressed by introducing the block-based Gaussian-Wiener bidirectional prediction model to provide an effective solution. When the image is embedded into blocks and the characteristics of local blocks for prediction are utilized, the computational complexity can be reduced, and the prediction accuracy can be improved. Gaussian-Wiener filtering can smooth prediction results and reduce the impact of artifacts and noise, which can improve image quality. The bidirectional prediction method combines the original sparse focal stack images(FoSIs) with the prediction results to generate dense FoSIs, thereby expanding the DoF of the optical system. The Gaussian-Wiener bidirectional prediction model provides an innovative method for capturing dense focus stack images. It can be applied to various scenarios and application fields, providing greater creative freedom and image processing capabilities for photographers, scientists, engineers, and artists.MethodThis work abstracts the FoS as a Gaussian-Wiener representation. The proposed bidirectional prediction model includes a bidirectional fitting module and a prediction generation module. On the basis of the Gaussian-Wiener representation model, a bidirectional fitting model is constructed to solve for the bidirectional prediction parameters and draw a new focal stack image. First, on the basis of the given sparse focus stack image sequence, the number ranges from near to far according to the focal length. These numbers start from 0 and are incremented according to a certain rule, such as increasing by 2 each time to ensure that all the numbers are even. These results in a set of sparse focus stack images arranged in serial order. Then, all images were divided into blocks according to predefined block sizes. The size of each block can be selected on the basis of specific needs and algorithms. The blocks located in adjacent numbers are combined to form a block pair, which becomes the most basic unit for bidirectional prediction. Before conducting bidirectional prediction, the image was preprocessed by dividing the focus stack image into blocks and recombining them into block pairs. This preprocessing process can be achieved by using image segmentation algorithms and block pair combination strategies. For each block pair, bidirectional prediction was performed to obtain the prediction parameters. These prediction parameters can be determined on the basis of specific prediction models and algorithms, such as the block-based Gaussian-Wiener bidirectional prediction model. In the bidirectional prediction module, block pairs can be used to fit the best bidirectional fitting parameters, and on this basis, the prediction generation parameters can be solved. When the information of the prediction generation parameters and block pairs is applied, new prediction blocks can be generated. Finally, when all the prediction blocks are concatenated according to their positions in the image, new prediction focus stack images can be obtained.ResultThis experiment is performed on 11 sparse focal stack images, with evaluation metrics using the peak signal-to-noise ratio (PSNR) and structural similarity index measure (SSIM). The average PSNR of the 11 sequence generated results is 40.861 dB, and the average SSIM is 0.976. Compared with the two comparison methods of generalized Gaussian and spatial coordinates, the PSNR is improved by 6.503 dB and 6.467 dB, respectively, and the SSIM is improved by 0.057 and 0.092, respectively. The average PSNR and SSIM of each sequence improved by at least 3.474 dB and 0.012, respectively.ConclusionThe experimental results show that the method proposed in this study outperforms both the subjective and objective comparison methods and has good performance on 11 different scene sequences. Combined with ablation experiments, the advantages of bidirectional prediction in the proposed method have been demonstrated. The results indicate that the proposed bidirectional prediction method can effectively generate new focal pile images and play a crucial role in visual applications that target various depths of the field.关键词:focal stack image(FoSI);prediction model;Gaussian-Wiener;representation model;bidirectional prediction111|113|0更新时间:2025-04-11

Image Processing and Coding

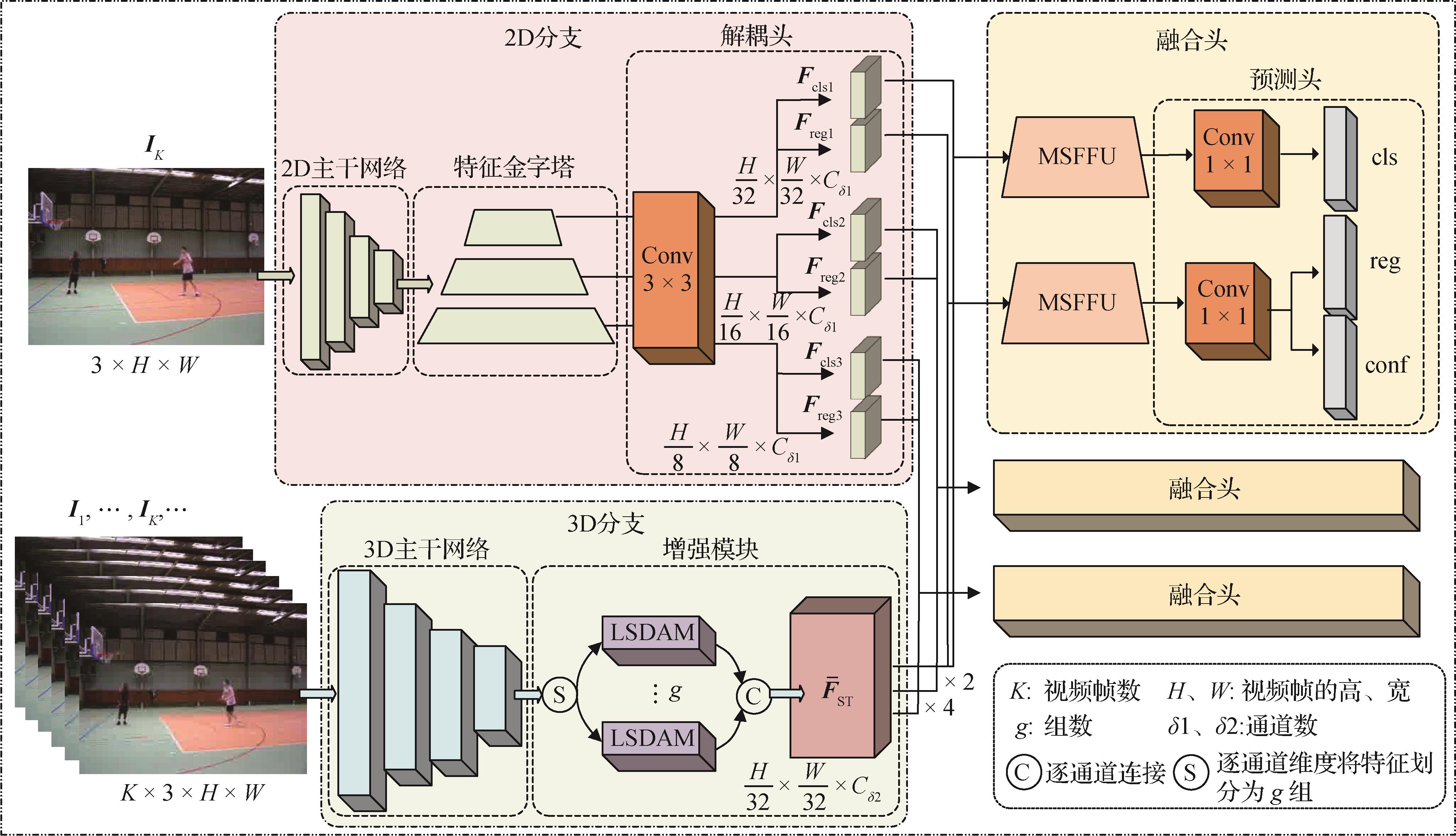

- “在动作检测领域,研究者提出了一种高效时空动作检测模型EAD,通过建模全局关键信息,提高了实时动作检测准确度。”

摘要:ObjectiveSpatial-temporal action detection (STAD) represents a significant challenge in the field of video understanding. The objective is to identify the temporal and spatial localization of actions occurring in a video and categorize related action classes. The majority of existing methods rely on the backbone for the feature modeling of video clips, which captures only local features and ignores the global contextual information of the interaction with the actors. The results are represented by a model that cannot fully comprehend the nuances of the entire scene. The current mainstream methods for real-time STAD tasks are dual-stream network-based methods. However, a simple channel-by-channel connection is typically employed to handle dual-branch network fusion, which results in a significant redundancy of the fused features and certain semantic differences in the branch features. This scheme affects the accuracy of the model. Here, an efficient STAD model called the efficient action detector (EAD), which can address the shortcomings of current methods, is proposed.MethodThe EAD model consists of three key components: the 2D branch, the 3D branch, and the fusion head. Among them, the 2D branch consists of a pretrained 2D backbone network, feature pyramid, and decoupling head; the 3D branch consists of a 3D backbone network and augmentation module; and the fusion head consists of a multiscale feature fusion unit (MSFFU) and a prediction head. First, key frames are extracted from the video clips and fed into the pretrained 2D branch backbone (YOLOv7) to detect the actors in the scene and obtain spatially decoupled features, which are classification features and localization features. Video spatial-temporal features are extracted from video clips via a pretrained lightweight video backbone network (Shufflenetv2). Second, the lightweight spatial dilated augmented module (LSDAM) uses the grouping idea to address spatial-temporal features, which serves to save resources. LSDAM consists of a dilated module (DM) and a spatial augmented module (SAM). The DM employs dilatation convolution with different dilation rates, which fully aggregates contextual feature information and reduces the loss of spatial resolution. The SAM takes the key information in the global features captured via the DM to focus and enhance the expression of the target features. The LSDAM receives the spatial-temporal features and sends them first to the DM to expand the sensory field, then subsequently to the SAM to extract the key information, and finally to the global, low-noise contextual information. Then, the enhanced features are dimensionally aligned with the spatially separated features and fed into the MSFFU for feature fusion. The MSFFU module refines the feature information via multiscale fusion and reduces the redundancy of the fused features, which enables the model to better understand the information in the whole scene. The MSFFU performs multiple levels of feature extraction for the double-branching features by utilizing different DO-Conv structures, and the individual MSFFU uses different DO-Conv structures to extract features from the dual-branch features at multiple levels, integrates each branch via element-by-element multiplication or addition, and then filters the irrelevant information in the features via a convolution operation. DO-Conv can accelerate the convergence of the network and improve the generalizability of the model, thereby improving the training speed of the model. Finally, the features at different levels are fed into the anchorless frame-based prediction head for STAD.ResultComparative detection results between EAD and existing algorithms were analyzed for the public datasets UCF101-24 and atomic visual actions (AVA) version 2.2. For the UCF101-24 dataset, frame-mAP, video-mAP, frame per second(FPS), GFLOPs, and Params are used as evaluation metrics to assess the accuracy and spatial-temporal complexity of the model. For the AVA dataset, frame-mAP and GFLOPs are used as evaluation metrics. In this work, ablation experiments on the EAD model are performed on the UCF101-24 dataset. The frame-mAP is 79.52%, and the video-mAP is 49.29% after the addition of the MSFFU, which are improvements of 0.41% and 0.14%, respectively, from the baseline. The frame-mAP is 80.96%, and the video-mAP is 49.72% after the addition of the LSDAM, which are improvements of 1.85% and 0.57%, respectively, from the baseline. The final model of the EAD frame-mAP is 80.93%, the video-mAP is 50.41%, the FPS is 53 f/s, the number of GFLOPs is 2.77, and the number of parameters is 10.92 M, which are improvements of 1.82% and 1.26%, respectively, compared with the baseline frame-averaged accuracy and video-averaged accuracy. The leakage and misdetection phenomenon of the baseline method also improved. In addition, EAD is compared with existing real-time STAD algorithms, in which the frame-mAP is improved by 0.53% and 0.43%, and the GFLOPs are reduced by 40.93 and 0.13 compared with those of YOWO and YOWOv2, respectively. On the AVA dataset, the frame-mAP and GFLOPs reach 13.74% and 2.77, respectively, for an input frame count of 16; moreover, the frame-mAP and GFLOPs reach 15.92% and 4.4%, respectively, for an input frame count of 32. Compared with other mainstream methods, EAD uses a lighter backbone network to achieve lower computational costs while achieving impressive results.ConclusionThis study proposes an STAD model called EAD, which is based on a two-stream network, to address the problems of missing global contextual information about actors’ interactions and the poor characterization of fused features. The results of the experimental results of the proposed model on the UCF101-24 and AVA datasets verify its robustness and effectiveness in STAD tasks by comparing it with the baseline and current mainstream methods. The proposed model can also be applied to the fields of intelligent monitoring, automatic driving, intelligent medical care, and other fields.关键词:deep learning;spatial-temporal action detection(STAD);two-branch network;dilated augmented module(DAM);multi-scale fusion146|135|0更新时间:2025-04-11

摘要:ObjectiveSpatial-temporal action detection (STAD) represents a significant challenge in the field of video understanding. The objective is to identify the temporal and spatial localization of actions occurring in a video and categorize related action classes. The majority of existing methods rely on the backbone for the feature modeling of video clips, which captures only local features and ignores the global contextual information of the interaction with the actors. The results are represented by a model that cannot fully comprehend the nuances of the entire scene. The current mainstream methods for real-time STAD tasks are dual-stream network-based methods. However, a simple channel-by-channel connection is typically employed to handle dual-branch network fusion, which results in a significant redundancy of the fused features and certain semantic differences in the branch features. This scheme affects the accuracy of the model. Here, an efficient STAD model called the efficient action detector (EAD), which can address the shortcomings of current methods, is proposed.MethodThe EAD model consists of three key components: the 2D branch, the 3D branch, and the fusion head. Among them, the 2D branch consists of a pretrained 2D backbone network, feature pyramid, and decoupling head; the 3D branch consists of a 3D backbone network and augmentation module; and the fusion head consists of a multiscale feature fusion unit (MSFFU) and a prediction head. First, key frames are extracted from the video clips and fed into the pretrained 2D branch backbone (YOLOv7) to detect the actors in the scene and obtain spatially decoupled features, which are classification features and localization features. Video spatial-temporal features are extracted from video clips via a pretrained lightweight video backbone network (Shufflenetv2). Second, the lightweight spatial dilated augmented module (LSDAM) uses the grouping idea to address spatial-temporal features, which serves to save resources. LSDAM consists of a dilated module (DM) and a spatial augmented module (SAM). The DM employs dilatation convolution with different dilation rates, which fully aggregates contextual feature information and reduces the loss of spatial resolution. The SAM takes the key information in the global features captured via the DM to focus and enhance the expression of the target features. The LSDAM receives the spatial-temporal features and sends them first to the DM to expand the sensory field, then subsequently to the SAM to extract the key information, and finally to the global, low-noise contextual information. Then, the enhanced features are dimensionally aligned with the spatially separated features and fed into the MSFFU for feature fusion. The MSFFU module refines the feature information via multiscale fusion and reduces the redundancy of the fused features, which enables the model to better understand the information in the whole scene. The MSFFU performs multiple levels of feature extraction for the double-branching features by utilizing different DO-Conv structures, and the individual MSFFU uses different DO-Conv structures to extract features from the dual-branch features at multiple levels, integrates each branch via element-by-element multiplication or addition, and then filters the irrelevant information in the features via a convolution operation. DO-Conv can accelerate the convergence of the network and improve the generalizability of the model, thereby improving the training speed of the model. Finally, the features at different levels are fed into the anchorless frame-based prediction head for STAD.ResultComparative detection results between EAD and existing algorithms were analyzed for the public datasets UCF101-24 and atomic visual actions (AVA) version 2.2. For the UCF101-24 dataset, frame-mAP, video-mAP, frame per second(FPS), GFLOPs, and Params are used as evaluation metrics to assess the accuracy and spatial-temporal complexity of the model. For the AVA dataset, frame-mAP and GFLOPs are used as evaluation metrics. In this work, ablation experiments on the EAD model are performed on the UCF101-24 dataset. The frame-mAP is 79.52%, and the video-mAP is 49.29% after the addition of the MSFFU, which are improvements of 0.41% and 0.14%, respectively, from the baseline. The frame-mAP is 80.96%, and the video-mAP is 49.72% after the addition of the LSDAM, which are improvements of 1.85% and 0.57%, respectively, from the baseline. The final model of the EAD frame-mAP is 80.93%, the video-mAP is 50.41%, the FPS is 53 f/s, the number of GFLOPs is 2.77, and the number of parameters is 10.92 M, which are improvements of 1.82% and 1.26%, respectively, compared with the baseline frame-averaged accuracy and video-averaged accuracy. The leakage and misdetection phenomenon of the baseline method also improved. In addition, EAD is compared with existing real-time STAD algorithms, in which the frame-mAP is improved by 0.53% and 0.43%, and the GFLOPs are reduced by 40.93 and 0.13 compared with those of YOWO and YOWOv2, respectively. On the AVA dataset, the frame-mAP and GFLOPs reach 13.74% and 2.77, respectively, for an input frame count of 16; moreover, the frame-mAP and GFLOPs reach 15.92% and 4.4%, respectively, for an input frame count of 32. Compared with other mainstream methods, EAD uses a lighter backbone network to achieve lower computational costs while achieving impressive results.ConclusionThis study proposes an STAD model called EAD, which is based on a two-stream network, to address the problems of missing global contextual information about actors’ interactions and the poor characterization of fused features. The results of the experimental results of the proposed model on the UCF101-24 and AVA datasets verify its robustness and effectiveness in STAD tasks by comparing it with the baseline and current mainstream methods. The proposed model can also be applied to the fields of intelligent monitoring, automatic driving, intelligent medical care, and other fields.关键词:deep learning;spatial-temporal action detection(STAD);two-branch network;dilated augmented module(DAM);multi-scale fusion146|135|0更新时间:2025-04-11 - “在深度伪造检测领域,专家提出了跨域人脸伪造检测模型NIG-FFD,有效提升了跨域和本域检测性能。”