最新刊期

卷 30 , 期 1 , 2025

- “在计算机视觉领域,视觉基础模型的研究进展为解决传统深度学习模型依赖大量标注数据和泛化能力不足的问题提供了新方案。”

摘要:In the field of computer vision, traditional deep learning vision models have exhibited remarkable performance on specific tasks. However, their substantial dependency on large amounts of annotated data and limited capability in generalization across new scenes significantly elevate usage costs and restrict the application scope of these models. Recently, novel model architectures centered around the Transformer, particularly in the domain of self-supervised learning, have emerged as solutions to these challenges. These models, typically pre-trained on extensive datasets, demonstrate robust generalization capabilities in complex visual scenarios and are widely recognized as vision foundation models. This paper delves into the current research status and future trends of vision foundation models, with a focus on key technological advancements in this field and their potential impact on future developments in computer vision. The paper begins by reviewing and organizing the background and developmental history of vision foundation models, followed by an introduction to the key model structures that have emerged in this developmental trajectory. The article further introduces and analyzes the design philosophies of various pre-training tasks employed in constructing vision foundation models, categorizing the existing models based on their characteristics. Additionally, the paper presents representative works in different types of vision foundation models and compiles the currently available datasets for pre-training these models. Finally, the paper summarizes the current research status of vision foundation models, reflects on existing challenges, and anticipates potential future research directions. This paper offers an expansive examination of the landscape of visual foundation models, chronicling their evolution, current achievements, and charting a course for future research. It acknowledges the Transformative impact of deep learning on computer vision, shifting the paradigm from traditional computational methods to models that excel in specialized tasks. However, it also confronts the limitations of these models, particularly their narrow applicability and reliance on extensive, meticulously annotated datasets, which have elevated deployment costs and restricted versatility. In response, the emergence of Transformer-based architectures has instigated a paradigm shift, leading to the development of vision foundation models that are redefining the capabilities and breadth of applicability of computer vision systems. This paper provides a systematic review of these models, offering historical context that underscores the transition from traditional deep learning models to the current paradigm involving Transformer-based models. It delves into the core structures of these models, such as the Transformer and vision Transformer, discussing their architectural intricacies and the principles that underpin their design, enabling them to capture the complexities of visual data with nuance and accuracy. A pivotal contribution of this paper is the thorough analysis of pre-training tasks, which is foundational to the construction of robust vision foundation models. It categorizes these tasks on the basis of their design philosophies and their effectiveness in enabling models to learn rich feature representations from large-scale datasets, thereby enhancing their generalization capabilities across a myriad of computer vision tasks. This article primarily introduces various pre-training methods such as supervised learning, image contrastive learning, image-text contrastive learning, and masked image modeling. It analyzes the characteristics of these pre-training tasks, the representative works corresponding to each, as well as their applicable scenarios and potential directions for improvement. This paper introduces existing vision foundation models based on mixture of experts according to universal visual representation backbones, aligned with language modalities, and generative multi-task models. Specifically, the article first analyzes the background of each type of foundation model, the core ideas of the models, and the pioneering work. Then, it analyzes the representative works in the development of each type of foundation model. Finally, it provides a prospect based on the strengths and weaknesses of each method. The paper also evaluates existing vision foundation models, scrutinizing their characteristics, capabilities, and the datasets utilized for their pre-training, providing an in-depth analysis of their performance on a variety of tasks, including image classification, object detection, and semantic segmentation. This paper delves into the critical component of pre-training datasets that serve as the foundational resources for the development and refinement of visual foundation models. It presents a comprehensive overview of the extensive image datasets and the burgeoning realm of image-text datasets that are instrumental in the pre-training phase of these models. The discussion commences with the seminal ImageNet dataset, which has been crucial in computer vision research and the benchmark for evaluating model performance. The paper then outlines the expansive ImageNet-21k and the colossal JFT-300M/3B datasets, highlighting their scale and the implications of such magnitude on model training and generalization capabilities. The COCO and ADE20k datasets are examined for their role in tasks such as object detection and semantic segmentation, underlining their contribution to the diversity and complexity of pre-training data. The Object365 dataset is also acknowledged for its focus on open-world target detection, offering a rich resource for model exposure to a wide array of visual categories. In addition to image datasets, the paper underscores the importance of image-text datasets such as Conceptual Captions and Flickr30k, which are becoming increasingly vital for models that integrate multimodal inputs. These datasets provide the necessary linkage between visual content and textual descriptions, enabling models to develop a deeper understanding of the semantic context. The paper anticipates research directions such as establishing comprehensive benchmark evaluation systems, enhancing cross-modal capabilities, expanding the coverage of various visual tasks, leveraging structured knowledge bases for training, and developing model compression and optimization techniques to facilitate the deployment of these models in real-world scenarios. The ultimate goal is to develop visual foundation models that are more versatile and intelligent, capable of addressing complex visual problems in the real world. Reflecting on the challenges faced by the field, the paper identifies the pressing need for more efficient training algorithms, the development of better evaluation metrics, and the integration of multimodal data. This paper also highlights several challenges, including the need for more unified model paradigms in computer vision, the development of effective performance improvement paths similar to the “scaling law” observed in NLP, and the necessity for new evaluation metrics that can assess models’ cross-modal understanding and performance across a wide range of tasks. It anticipates future research directions, such as the modularization of vision foundation models to enhance their adaptability and the exploration of weakly supervised learning techniques, which aim to diminish reliance on large annotated datasets. One of the key contributions of this paper is the in-depth discussion on the real-world applications of vision foundation models. It explores their implications for tasks such as medical image analysis, autonomous driving, and surveillance, underscoring their transformative potential and profound possible impact on these domains. In conclusion, this paper synthesizes the current state of research on vision foundation models and outlines opportunities for future advancements. It emphasizes the importance of continued interdisciplinary research to unlock the full potential of vision foundation models and address the intricate challenges in the field of computer vision. The paper’s advocacy for an interdisciplinary approach is underpinned by the belief that it will foster innovation and enable the development of models that are not only more efficient and adaptable but also capable of addressing complex and multifaceted problems in the real world.关键词:foundation model;computer vision(CV);pre-training model;self-supervised learning;multi-task learning709|467|0更新时间:2025-04-11

摘要:In the field of computer vision, traditional deep learning vision models have exhibited remarkable performance on specific tasks. However, their substantial dependency on large amounts of annotated data and limited capability in generalization across new scenes significantly elevate usage costs and restrict the application scope of these models. Recently, novel model architectures centered around the Transformer, particularly in the domain of self-supervised learning, have emerged as solutions to these challenges. These models, typically pre-trained on extensive datasets, demonstrate robust generalization capabilities in complex visual scenarios and are widely recognized as vision foundation models. This paper delves into the current research status and future trends of vision foundation models, with a focus on key technological advancements in this field and their potential impact on future developments in computer vision. The paper begins by reviewing and organizing the background and developmental history of vision foundation models, followed by an introduction to the key model structures that have emerged in this developmental trajectory. The article further introduces and analyzes the design philosophies of various pre-training tasks employed in constructing vision foundation models, categorizing the existing models based on their characteristics. Additionally, the paper presents representative works in different types of vision foundation models and compiles the currently available datasets for pre-training these models. Finally, the paper summarizes the current research status of vision foundation models, reflects on existing challenges, and anticipates potential future research directions. This paper offers an expansive examination of the landscape of visual foundation models, chronicling their evolution, current achievements, and charting a course for future research. It acknowledges the Transformative impact of deep learning on computer vision, shifting the paradigm from traditional computational methods to models that excel in specialized tasks. However, it also confronts the limitations of these models, particularly their narrow applicability and reliance on extensive, meticulously annotated datasets, which have elevated deployment costs and restricted versatility. In response, the emergence of Transformer-based architectures has instigated a paradigm shift, leading to the development of vision foundation models that are redefining the capabilities and breadth of applicability of computer vision systems. This paper provides a systematic review of these models, offering historical context that underscores the transition from traditional deep learning models to the current paradigm involving Transformer-based models. It delves into the core structures of these models, such as the Transformer and vision Transformer, discussing their architectural intricacies and the principles that underpin their design, enabling them to capture the complexities of visual data with nuance and accuracy. A pivotal contribution of this paper is the thorough analysis of pre-training tasks, which is foundational to the construction of robust vision foundation models. It categorizes these tasks on the basis of their design philosophies and their effectiveness in enabling models to learn rich feature representations from large-scale datasets, thereby enhancing their generalization capabilities across a myriad of computer vision tasks. This article primarily introduces various pre-training methods such as supervised learning, image contrastive learning, image-text contrastive learning, and masked image modeling. It analyzes the characteristics of these pre-training tasks, the representative works corresponding to each, as well as their applicable scenarios and potential directions for improvement. This paper introduces existing vision foundation models based on mixture of experts according to universal visual representation backbones, aligned with language modalities, and generative multi-task models. Specifically, the article first analyzes the background of each type of foundation model, the core ideas of the models, and the pioneering work. Then, it analyzes the representative works in the development of each type of foundation model. Finally, it provides a prospect based on the strengths and weaknesses of each method. The paper also evaluates existing vision foundation models, scrutinizing their characteristics, capabilities, and the datasets utilized for their pre-training, providing an in-depth analysis of their performance on a variety of tasks, including image classification, object detection, and semantic segmentation. This paper delves into the critical component of pre-training datasets that serve as the foundational resources for the development and refinement of visual foundation models. It presents a comprehensive overview of the extensive image datasets and the burgeoning realm of image-text datasets that are instrumental in the pre-training phase of these models. The discussion commences with the seminal ImageNet dataset, which has been crucial in computer vision research and the benchmark for evaluating model performance. The paper then outlines the expansive ImageNet-21k and the colossal JFT-300M/3B datasets, highlighting their scale and the implications of such magnitude on model training and generalization capabilities. The COCO and ADE20k datasets are examined for their role in tasks such as object detection and semantic segmentation, underlining their contribution to the diversity and complexity of pre-training data. The Object365 dataset is also acknowledged for its focus on open-world target detection, offering a rich resource for model exposure to a wide array of visual categories. In addition to image datasets, the paper underscores the importance of image-text datasets such as Conceptual Captions and Flickr30k, which are becoming increasingly vital for models that integrate multimodal inputs. These datasets provide the necessary linkage between visual content and textual descriptions, enabling models to develop a deeper understanding of the semantic context. The paper anticipates research directions such as establishing comprehensive benchmark evaluation systems, enhancing cross-modal capabilities, expanding the coverage of various visual tasks, leveraging structured knowledge bases for training, and developing model compression and optimization techniques to facilitate the deployment of these models in real-world scenarios. The ultimate goal is to develop visual foundation models that are more versatile and intelligent, capable of addressing complex visual problems in the real world. Reflecting on the challenges faced by the field, the paper identifies the pressing need for more efficient training algorithms, the development of better evaluation metrics, and the integration of multimodal data. This paper also highlights several challenges, including the need for more unified model paradigms in computer vision, the development of effective performance improvement paths similar to the “scaling law” observed in NLP, and the necessity for new evaluation metrics that can assess models’ cross-modal understanding and performance across a wide range of tasks. It anticipates future research directions, such as the modularization of vision foundation models to enhance their adaptability and the exploration of weakly supervised learning techniques, which aim to diminish reliance on large annotated datasets. One of the key contributions of this paper is the in-depth discussion on the real-world applications of vision foundation models. It explores their implications for tasks such as medical image analysis, autonomous driving, and surveillance, underscoring their transformative potential and profound possible impact on these domains. In conclusion, this paper synthesizes the current state of research on vision foundation models and outlines opportunities for future advancements. It emphasizes the importance of continued interdisciplinary research to unlock the full potential of vision foundation models and address the intricate challenges in the field of computer vision. The paper’s advocacy for an interdisciplinary approach is underpinned by the belief that it will foster innovation and enable the development of models that are not only more efficient and adaptable but also capable of addressing complex and multifaceted problems in the real world.关键词:foundation model;computer vision(CV);pre-training model;self-supervised learning;multi-task learning709|467|0更新时间:2025-04-11 - “集成电路技术发展离不开半导体晶圆,其缺陷检测对保证芯片性能至关重要。综述了晶圆缺陷检测方法的研究进展,为提高晶圆良品率和生产率提供解决方案。”

摘要:The integrated circuit chip is developed on a semiconductor wafer substrate and serves as a fundamental electronic component in many electronic devices. The semiconductor wafer, which is the foundation for manufacturing integrated circuits, contains billions of tiny electronic components. Production is gradually moving toward high-performance and small-sized directions because of the semiconductor industry’s demand for high-quality wafers. In the manufacturing process of wafers, unexpected structures may remain due to environmental, operational, and process-related reasons, leading to a decrease in the operational performance of the wafer chip. These unforeseen structures are referred to as wafer defects. The causes of wafer defects are diverse, with common types including scratches, pits, chemical stains, and dust contamination. The occurrence of unpredictable, mixed, and complex defects can result in increased production costs, decreased product yield, and a decline in manufacturing process stability. Therefore, defect detection on semiconductor wafers is crucial to ensure their performance and improve the yield of high-quality products. Currently, methods for detecting surface defects on semiconductor wafers include manual visual inspection, optical inspection, ultrasonic testing, and machine vision detection. Manual visual inspection involves operators using microscopes or the naked eye to check for obvious defects on the wafer surface, but this method has low efficiency and accuracy for detecting small defects. Optical inspection uses light sources to illuminate the wafer surface and determines the presence of defects by detecting the intensity and shape of reflected and transmitted light. This non-contact method is suitable for large-area defect detection but is less effective for detecting small and shallow defects and cannot identify internal defects within the wafer. Ultrasonic testing utilizes the propagation speed of ultrasonic waves in the wafer, along with the reflection and scattering characteristics of defects such as cracks and voids, but it is sensitive to material density and sound speed, leading to reduced detection effectiveness in specific material. In addition to these three methods, machine vision detection uses image processing and computer vision technology to detect defects on the wafer surface. It is a non-contact, high-speed, automated, cost-effective, and scalable approach, making machine vision-based detection the primary method in the wafer defect detection field today. With the development of computer vision, machine learning, and especially deep learning, the performance of defect identification and classification algorithms for semiconductor wafers has been further enhanced. This not only improves detection efficiency but also reduces production costs and enhances product quality. Machine vision-based detection methods generally fall into three categories: traditional image processing methods, machine learning methods, and deep learning methods. The development of many emerging technology fields today requires the support of integrated circuit technology. As a key role in integrated circuit chips, semiconductor wafers are prone to various defects due to complex manufacturing processes, and their failures will greatly affect the final performance of the chip and increase costs. Therefore, defect detection of semiconductor wafers is an important means to ensure its yield and productivity. Wafer defect detection combined with machine vision algorithms has strong universality and high speed and can better meet the relevant needs of industrial inspection. To have a deeper understanding of the research status of wafer defect detection, this paper conducts a comprehensive and thorough research on the defect detection method based on machine vision. Wafer manufacturing, its surface defect detection process, and the classification of wafer defects are introduced. The detection methods based on traditional image processing, machine learning, and deep learning algorithms are fully explored. Detection methods based on traditional image processing are divided according to the spatial domain and the transform domain. Detection methods based on the spatial domain include template matching, edge gradient, optical flow method, and spatial filtering method and are divided according to the correlation between the defect pattern and the template or the dissimilarity of the background. Detection methods based on the transform domain include wavelet transform, frequency filtering, and Fourier transform. Further research is being conducted on methods based on Fourier transform in the wavelet transform domain. It focuses on the application of machine learning and deep neural network in wafer defect detection. According to the learning method, the learning techniques utilized for wafer defect identification are divided into supervised machine learning, unsupervised machine learning, hybrid learning, semi-supervised learning, and transfer learning; according to the network category, deep neural networks for wafer defect detection is divided into detection network, classification network, segmentation network, and combination network. The detection performance and advantages and disadvantages of each method are compared in depth in each section. From the collected literature, it indicates that research on wafer defect detection based on transformer is increasing year by year. The large datasets shared in this field mainly include WM-811K and MixedWM38. Most of the datasets are not open to the public and are maintained and updated by teams or companies. Representative evaluation indicators mainly include single-label indicators of supervised learning/semi-supervised learning (accuracy, precision, recall, F1 score) and multi-label indicators (micro-precision, micro-recall, exact match ratio, Hamming loss). Unsupervised learning indicators include Rand index, adjusted Rand index, normalized mutual information, adjusted mutual information, and purity. Finally, the existing problems of the current vision-based wafer defect detection are identified, namely, low data availability, serious class imbalance problem, high computational complexity, and limited research on mixed-type defects. Future development trends include multimodal and non-destructive detection techniques and more efficient feature representation learning.关键词:semiconductor wafer;defect inspection;machine vision;deep neural network(DNN);machine learning;wafer defect dataset377|156|0更新时间:2025-04-11

摘要:The integrated circuit chip is developed on a semiconductor wafer substrate and serves as a fundamental electronic component in many electronic devices. The semiconductor wafer, which is the foundation for manufacturing integrated circuits, contains billions of tiny electronic components. Production is gradually moving toward high-performance and small-sized directions because of the semiconductor industry’s demand for high-quality wafers. In the manufacturing process of wafers, unexpected structures may remain due to environmental, operational, and process-related reasons, leading to a decrease in the operational performance of the wafer chip. These unforeseen structures are referred to as wafer defects. The causes of wafer defects are diverse, with common types including scratches, pits, chemical stains, and dust contamination. The occurrence of unpredictable, mixed, and complex defects can result in increased production costs, decreased product yield, and a decline in manufacturing process stability. Therefore, defect detection on semiconductor wafers is crucial to ensure their performance and improve the yield of high-quality products. Currently, methods for detecting surface defects on semiconductor wafers include manual visual inspection, optical inspection, ultrasonic testing, and machine vision detection. Manual visual inspection involves operators using microscopes or the naked eye to check for obvious defects on the wafer surface, but this method has low efficiency and accuracy for detecting small defects. Optical inspection uses light sources to illuminate the wafer surface and determines the presence of defects by detecting the intensity and shape of reflected and transmitted light. This non-contact method is suitable for large-area defect detection but is less effective for detecting small and shallow defects and cannot identify internal defects within the wafer. Ultrasonic testing utilizes the propagation speed of ultrasonic waves in the wafer, along with the reflection and scattering characteristics of defects such as cracks and voids, but it is sensitive to material density and sound speed, leading to reduced detection effectiveness in specific material. In addition to these three methods, machine vision detection uses image processing and computer vision technology to detect defects on the wafer surface. It is a non-contact, high-speed, automated, cost-effective, and scalable approach, making machine vision-based detection the primary method in the wafer defect detection field today. With the development of computer vision, machine learning, and especially deep learning, the performance of defect identification and classification algorithms for semiconductor wafers has been further enhanced. This not only improves detection efficiency but also reduces production costs and enhances product quality. Machine vision-based detection methods generally fall into three categories: traditional image processing methods, machine learning methods, and deep learning methods. The development of many emerging technology fields today requires the support of integrated circuit technology. As a key role in integrated circuit chips, semiconductor wafers are prone to various defects due to complex manufacturing processes, and their failures will greatly affect the final performance of the chip and increase costs. Therefore, defect detection of semiconductor wafers is an important means to ensure its yield and productivity. Wafer defect detection combined with machine vision algorithms has strong universality and high speed and can better meet the relevant needs of industrial inspection. To have a deeper understanding of the research status of wafer defect detection, this paper conducts a comprehensive and thorough research on the defect detection method based on machine vision. Wafer manufacturing, its surface defect detection process, and the classification of wafer defects are introduced. The detection methods based on traditional image processing, machine learning, and deep learning algorithms are fully explored. Detection methods based on traditional image processing are divided according to the spatial domain and the transform domain. Detection methods based on the spatial domain include template matching, edge gradient, optical flow method, and spatial filtering method and are divided according to the correlation between the defect pattern and the template or the dissimilarity of the background. Detection methods based on the transform domain include wavelet transform, frequency filtering, and Fourier transform. Further research is being conducted on methods based on Fourier transform in the wavelet transform domain. It focuses on the application of machine learning and deep neural network in wafer defect detection. According to the learning method, the learning techniques utilized for wafer defect identification are divided into supervised machine learning, unsupervised machine learning, hybrid learning, semi-supervised learning, and transfer learning; according to the network category, deep neural networks for wafer defect detection is divided into detection network, classification network, segmentation network, and combination network. The detection performance and advantages and disadvantages of each method are compared in depth in each section. From the collected literature, it indicates that research on wafer defect detection based on transformer is increasing year by year. The large datasets shared in this field mainly include WM-811K and MixedWM38. Most of the datasets are not open to the public and are maintained and updated by teams or companies. Representative evaluation indicators mainly include single-label indicators of supervised learning/semi-supervised learning (accuracy, precision, recall, F1 score) and multi-label indicators (micro-precision, micro-recall, exact match ratio, Hamming loss). Unsupervised learning indicators include Rand index, adjusted Rand index, normalized mutual information, adjusted mutual information, and purity. Finally, the existing problems of the current vision-based wafer defect detection are identified, namely, low data availability, serious class imbalance problem, high computational complexity, and limited research on mixed-type defects. Future development trends include multimodal and non-destructive detection techniques and more efficient feature representation learning.关键词:semiconductor wafer;defect inspection;machine vision;deep neural network(DNN);machine learning;wafer defect dataset377|156|0更新时间:2025-04-11 - “在海洋强国战略背景下,中国专家全面梳理了水下图像清晰化方法,为解决水下机器视觉感知难题提供解决方案。”

摘要:Since the inception of the marine power strategy, there has been an increasing focus on an investigation into the quality of underwater images in the marine environment. However, unlike images captured in favorable terrestrial conditions, light propagation underwater is influenced by the absorption and scattering of the underwater medium. Light absorption can result in color distortion, reduced contrast, and diminished brightness in underwater images, while light scattering may cause haziness, loss of details, and noise amplification. The challenge posed by low-quality underwater images hinders effective machine vision in underwater environments. Therefore, researching effective methods for enhancing underwater machine vision has become a critical issue in the current field of underwater vision. This topic holds significant theoretical and practical significance for strengthening marine technological capabilities and promoting the sustained and healthy development of the marine economy. This paper provides a comprehensive overview of existing underwater image clarification methods, highlighting the strengths and disadvantages of each approach. For instance, image restoration based on methods relies on prior assumptions, but an excess of prior knowledge can result in difficulties with multi-parameter optimization and sensitivity to robustness. Meanwhile, image enhancement based on methods only considers the pixel information of the image and does not consider the imaging model, thereby risking noise amplification and local over-enhancement. Consequently, designing simple yet effective methods for underwater image clarification is crucial for improving the quality of underwater images. This paper provides a comprehensive overview of methods to enhance the quality of underwater images through an extensive exploration of image restoration and image enhancement techniques. It concludes with a summary of the methods and their merits and demerits. With regard to image restoration, the methods are categorized into four types: underwater optical imaging, polarization characteristics, prior knowledge, and deep learning. Optical imaging methods primarily consider the optical properties of the water itself, accounting for phenomena such as light attenuation, scattering, and absorption in the underwater environment. These methods rely on physical optical models to characterize underwater light propagation. Polarization characteristic methods involve collecting polarized images from the same scene, separating background light and scattered light, estimating light intensity and transmittance, and inversely obtaining clarified images. Prior methods guide image processing through prior information, and deep learning methods utilize deep neural network models to restore underwater images. For image enhancement-based methods, the overview includes frequency-domain, spatial-domain, color constancy, fusion-based, and deep learning methods. Frequency-domain methods process underwater images through convolution or spatial transformations to achieve enhancement. Spatial-domain methods directly act on image pixels, altering their intrinsic characteristics through techniques such as grayscale mapping, effectively improving image contrast and detail. Color constancy methods enhance images by leveraging color consistency present in the image. Fusion methods apply multiple algorithms to a single input image, generating enhanced versions. Subsequently, fusion weights are calculated for these enhanced images, and the final enhanced image is generated through image fusion. Regarding deep learning-based methods, the summary covers convolutional neural network (CNN)-based and generative adversarial network (GAN)-based approaches. The former employs CNNs to enhance underwater images by learning image features, structure, and deep network processing, whereas the latter utilizes generator and discriminator components in a GAN to enhance and restore underwater images. The paper then delves into a detailed discussion of each method’s innovations, advantages, and limitations, summarizing the above methods comprehensively. Additionally, several commonly used underwater datasets are introduced, and a qualitative and quantitative analysis is conducted on representative clarity methods. This paper provides a comprehensive overview and summary of the degradation issues in underwater images, methods for underwater image clarification, underwater image datasets, and underwater image quality assessment. We selected 11 classical underwater image clarity methods and tested them on standard underwater datasets. We compared and analyzed these methods using five quantitative evaluation metrics. Through qualitative and quantitative comparative analyses, we summarized the strengths and weaknesses of these representative clarity methods and underwater image quality assessment methods, better understanding the current research status in underwater image clarification and outlining future development prospects. This study offers a comprehensive review of methods aimed at enhancing and restoring underwater images. It underscores the significance of enhancing image quality and underscores the scientific and economic potential of underwater image clarification methods in applications such as marine resource development. The study serves as a valuable guide for future research and practices in related fields.关键词:underwater image quality degradation;scattering and absorption of light;underwater image clarity;underwater image quality evaluation407|324|0更新时间:2025-04-11

摘要:Since the inception of the marine power strategy, there has been an increasing focus on an investigation into the quality of underwater images in the marine environment. However, unlike images captured in favorable terrestrial conditions, light propagation underwater is influenced by the absorption and scattering of the underwater medium. Light absorption can result in color distortion, reduced contrast, and diminished brightness in underwater images, while light scattering may cause haziness, loss of details, and noise amplification. The challenge posed by low-quality underwater images hinders effective machine vision in underwater environments. Therefore, researching effective methods for enhancing underwater machine vision has become a critical issue in the current field of underwater vision. This topic holds significant theoretical and practical significance for strengthening marine technological capabilities and promoting the sustained and healthy development of the marine economy. This paper provides a comprehensive overview of existing underwater image clarification methods, highlighting the strengths and disadvantages of each approach. For instance, image restoration based on methods relies on prior assumptions, but an excess of prior knowledge can result in difficulties with multi-parameter optimization and sensitivity to robustness. Meanwhile, image enhancement based on methods only considers the pixel information of the image and does not consider the imaging model, thereby risking noise amplification and local over-enhancement. Consequently, designing simple yet effective methods for underwater image clarification is crucial for improving the quality of underwater images. This paper provides a comprehensive overview of methods to enhance the quality of underwater images through an extensive exploration of image restoration and image enhancement techniques. It concludes with a summary of the methods and their merits and demerits. With regard to image restoration, the methods are categorized into four types: underwater optical imaging, polarization characteristics, prior knowledge, and deep learning. Optical imaging methods primarily consider the optical properties of the water itself, accounting for phenomena such as light attenuation, scattering, and absorption in the underwater environment. These methods rely on physical optical models to characterize underwater light propagation. Polarization characteristic methods involve collecting polarized images from the same scene, separating background light and scattered light, estimating light intensity and transmittance, and inversely obtaining clarified images. Prior methods guide image processing through prior information, and deep learning methods utilize deep neural network models to restore underwater images. For image enhancement-based methods, the overview includes frequency-domain, spatial-domain, color constancy, fusion-based, and deep learning methods. Frequency-domain methods process underwater images through convolution or spatial transformations to achieve enhancement. Spatial-domain methods directly act on image pixels, altering their intrinsic characteristics through techniques such as grayscale mapping, effectively improving image contrast and detail. Color constancy methods enhance images by leveraging color consistency present in the image. Fusion methods apply multiple algorithms to a single input image, generating enhanced versions. Subsequently, fusion weights are calculated for these enhanced images, and the final enhanced image is generated through image fusion. Regarding deep learning-based methods, the summary covers convolutional neural network (CNN)-based and generative adversarial network (GAN)-based approaches. The former employs CNNs to enhance underwater images by learning image features, structure, and deep network processing, whereas the latter utilizes generator and discriminator components in a GAN to enhance and restore underwater images. The paper then delves into a detailed discussion of each method’s innovations, advantages, and limitations, summarizing the above methods comprehensively. Additionally, several commonly used underwater datasets are introduced, and a qualitative and quantitative analysis is conducted on representative clarity methods. This paper provides a comprehensive overview and summary of the degradation issues in underwater images, methods for underwater image clarification, underwater image datasets, and underwater image quality assessment. We selected 11 classical underwater image clarity methods and tested them on standard underwater datasets. We compared and analyzed these methods using five quantitative evaluation metrics. Through qualitative and quantitative comparative analyses, we summarized the strengths and weaknesses of these representative clarity methods and underwater image quality assessment methods, better understanding the current research status in underwater image clarification and outlining future development prospects. This study offers a comprehensive review of methods aimed at enhancing and restoring underwater images. It underscores the significance of enhancing image quality and underscores the scientific and economic potential of underwater image clarification methods in applications such as marine resource development. The study serves as a valuable guide for future research and practices in related fields.关键词:underwater image quality degradation;scattering and absorption of light;underwater image clarity;underwater image quality evaluation407|324|0更新时间:2025-04-11

Review

- “最新研究提出了一种小波非均匀扩散模型,有效去除图像阴影,恢复颜色亮度和细节,提升图像质量。”

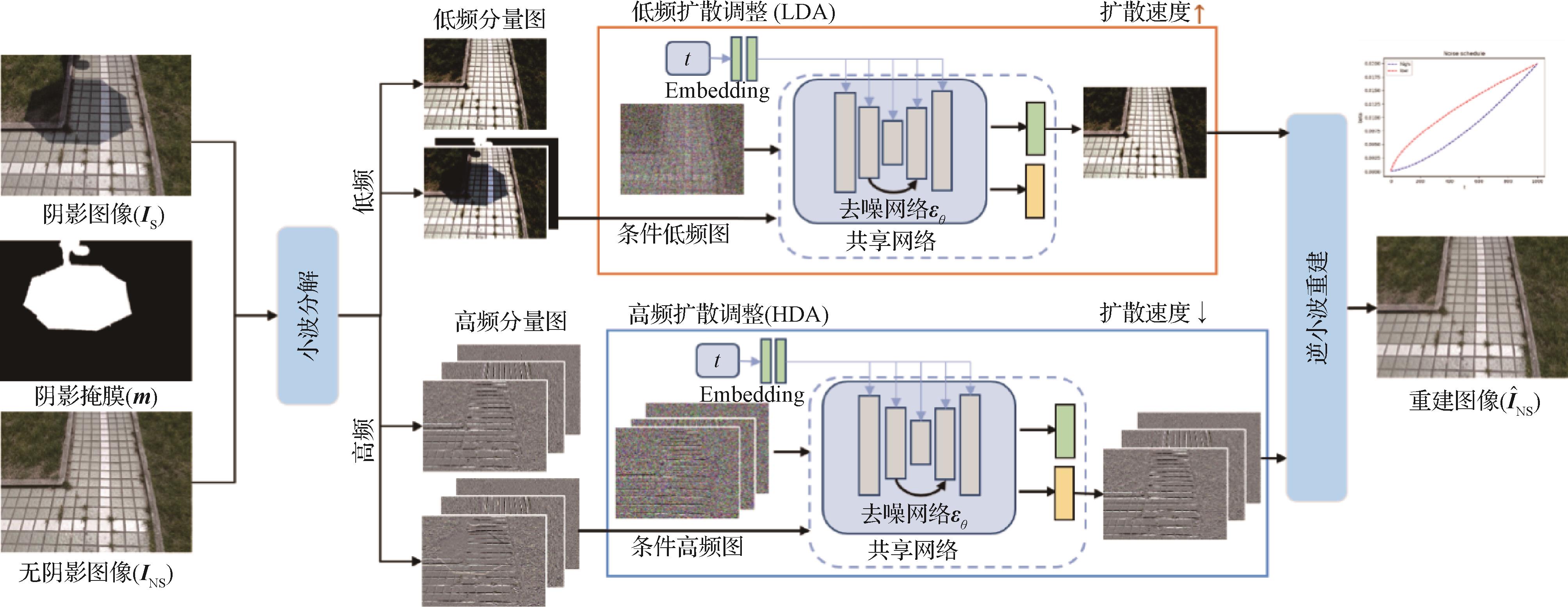

摘要:ObjectiveShadows are a common occurrence in optical images captured under partial or complete obstruction of light. In such images, shadow regions typically exhibit various forms of degradation, such as low contrast, color distortion, and loss of scene structure. Shadows not only impact the visual perception of humans but also impose constraints on the implementation of numerous sophisticated computer vision algorithms. Shadow removal can assist in many computer vision tasks. It aims to enhance the visibility of shadow regions in images and achieve consistent illumination distribution between shadow and non-shadow regions. Currently, deep learning-based shadow removal methods can be roughly divided into two categories. One typically utilizes deep learning to minimize the pixel-level differences between shadow regions and their corresponding non-shadow regions, aiming to learn deterministic mapping relationships between shadow and non-shadow images. However, the primary focus of shadow removal lies in locally restoring shadow regions, often overlooking the essential constraints required for effectively restoring boundaries between shadow and non-shadow regions. As a result, discrepancies in brightness exist between the restored shadow and non-shadow areas, along with the emergence of artifacts along the boundaries. Another approach involves using image generation models to directly model the complex distribution of shadow-free images, avoiding the direct learning of pixel-level mapping relationships, and treating shadow removal as a conditional generation task. While diffusion models have garnered significant attention due to their powerful generation capabilities, most existing diffusion generation models suffer from issues such as time-consuming image restoration and sensitivity to resolution when recovering images. Inspired by these challenges, a wavelet non-uniform diffusion model (WNDM) is proposed, which combines the advantages of wavelet decomposition and the generation ability of diffusion models to solve the above problems.MethodFirst, the image is decomposed into low-frequency and high-frequency components via wavelet decomposition. Then, diffusion generation networks are designed separately for low-frequency and high-frequency components to reconstruct the wavelet domain distribution of shadow-free images and restore various degraded information within these components, such as low-frequency (color, brightness) and high-frequency details. Wavelet transform can decompose the image into high-frequency and low-frequency images without sacrificing information, and the spatial size of the decomposed images is halved. Thus, modeling diffusion in the wavelet domain not only greatly accelerates model inference but also captures information that may be lost in the pixel domain. Furthermore, considering the complexity of the distribution of low-frequency and high-frequency components and their sensitivity to noise, for example, high-frequency components exhibit sparsity, making it easier for neural networks to learn their features. Hence, this study devises two separate adaptive diffusion noise scheduling tables tailored for low-frequency and high-frequency components. The branch for low-frequency diffusion adjustment independently fine-tunes the low-frequency information within shadow images, whereas the branch for high-frequency diffusion adjustment independently refines the high-frequency information within shadow images, resulting in the generation of more precise low-frequency and high-frequency images, respectively. Additionally, the low-frequency and high-frequency diffusion adjustment branches are consolidated to share a denoising network, thus streamlining model complexity and optimizing computational resources. The difference lies in the design of two prediction branches in the final layer of this network. These branches consist of several stacked convolution blocks, each predicting the low-frequency and high-frequency components of the shadow-free image, respectively. Finally, high-quality shadow-free images are reconstructed using inverse wavelet transform.ResultThe experiments were conducted on three shadow removal datasets for training and testing. On the shadow removal dataset (SRD) dataset, comparisons were made with nine state-of-the-art shadow removal algorithms. The proposed model achieved the best or second-best results in terms of peak signal-to-noise ratio (PSNR), structural similarity index (SSIM), and root mean square error (RMSE) in both non-shadow regions and the entire image. On the dataset with image shadow triplets dataset (ISTD), the performance was the best in non-shadow regions, with improvements of 0.36 dB in PSNR, 0.004 in SSIM, and 0.04 in RMSE compared with the second-best model. It ranked second in performance across all metrics for the entire image. On the augmented dataset with image shadow triplets (ISTD+), compared with six state-of-the-art shadow removal algorithms, the performance was the best in non-shadow regions, with improvements of 0.47 dB in PSNR and 0.1 in RMSE. Additionally, regarding the advanced shadow removal diffusion model ShadowDiffusion, the RMSE for the entire image was 3.63 on the SRD dataset when generating images of 256 × 256 pixels resolution. However, a significant performance drop occurred when generating images of the original resolution of 840 × 640 pixels, with RMSE increasing to 7.19. By contrast, our approach yielded RMSE values of 3.80 and 4.06 for images of dimensions 256 × 256 pixels and 840 × 640 pixels, respectively, showcasing consistent performance. Additionally, the time required to generate a single original image of size 840 × 640 pixels was reduced by roughly fourfold compared with ShadowDiffusion. Furthermore, our method was expanded to address image raindrop removal tasks, delivering competitive results on the RainDrop dataset.ConclusionIn this paper, the proposed method accelerates the sampling time of the diffusion model. While removing shadows, it restores missing color, brightness, and rich details in shadow regions. It treats shadow removal as an image generation task in the wavelet domain and designs two adaptive diffusion flows for the low-frequency and high-frequency components of the image wavelet domain to address the degradation of low-frequency (color, brightness) and high-frequency detail information caused by shadow images. Benefiting from the frequency decomposition of the wavelet transform, WNDM does not learn from the entangled pixel space domain but effectively separates and trains them separately, thereby generating more refined low-frequency and high-frequency information for reconstructing the final image. Extensive experiments on multiple datasets demonstrate the effectiveness of WNDM, achieving competitive results compared with state-of-the-art methods.关键词:shadow removal;diffusion model(DM);wavelet transform;dual-branch network;noise schedule189|129|0更新时间:2025-04-11

摘要:ObjectiveShadows are a common occurrence in optical images captured under partial or complete obstruction of light. In such images, shadow regions typically exhibit various forms of degradation, such as low contrast, color distortion, and loss of scene structure. Shadows not only impact the visual perception of humans but also impose constraints on the implementation of numerous sophisticated computer vision algorithms. Shadow removal can assist in many computer vision tasks. It aims to enhance the visibility of shadow regions in images and achieve consistent illumination distribution between shadow and non-shadow regions. Currently, deep learning-based shadow removal methods can be roughly divided into two categories. One typically utilizes deep learning to minimize the pixel-level differences between shadow regions and their corresponding non-shadow regions, aiming to learn deterministic mapping relationships between shadow and non-shadow images. However, the primary focus of shadow removal lies in locally restoring shadow regions, often overlooking the essential constraints required for effectively restoring boundaries between shadow and non-shadow regions. As a result, discrepancies in brightness exist between the restored shadow and non-shadow areas, along with the emergence of artifacts along the boundaries. Another approach involves using image generation models to directly model the complex distribution of shadow-free images, avoiding the direct learning of pixel-level mapping relationships, and treating shadow removal as a conditional generation task. While diffusion models have garnered significant attention due to their powerful generation capabilities, most existing diffusion generation models suffer from issues such as time-consuming image restoration and sensitivity to resolution when recovering images. Inspired by these challenges, a wavelet non-uniform diffusion model (WNDM) is proposed, which combines the advantages of wavelet decomposition and the generation ability of diffusion models to solve the above problems.MethodFirst, the image is decomposed into low-frequency and high-frequency components via wavelet decomposition. Then, diffusion generation networks are designed separately for low-frequency and high-frequency components to reconstruct the wavelet domain distribution of shadow-free images and restore various degraded information within these components, such as low-frequency (color, brightness) and high-frequency details. Wavelet transform can decompose the image into high-frequency and low-frequency images without sacrificing information, and the spatial size of the decomposed images is halved. Thus, modeling diffusion in the wavelet domain not only greatly accelerates model inference but also captures information that may be lost in the pixel domain. Furthermore, considering the complexity of the distribution of low-frequency and high-frequency components and their sensitivity to noise, for example, high-frequency components exhibit sparsity, making it easier for neural networks to learn their features. Hence, this study devises two separate adaptive diffusion noise scheduling tables tailored for low-frequency and high-frequency components. The branch for low-frequency diffusion adjustment independently fine-tunes the low-frequency information within shadow images, whereas the branch for high-frequency diffusion adjustment independently refines the high-frequency information within shadow images, resulting in the generation of more precise low-frequency and high-frequency images, respectively. Additionally, the low-frequency and high-frequency diffusion adjustment branches are consolidated to share a denoising network, thus streamlining model complexity and optimizing computational resources. The difference lies in the design of two prediction branches in the final layer of this network. These branches consist of several stacked convolution blocks, each predicting the low-frequency and high-frequency components of the shadow-free image, respectively. Finally, high-quality shadow-free images are reconstructed using inverse wavelet transform.ResultThe experiments were conducted on three shadow removal datasets for training and testing. On the shadow removal dataset (SRD) dataset, comparisons were made with nine state-of-the-art shadow removal algorithms. The proposed model achieved the best or second-best results in terms of peak signal-to-noise ratio (PSNR), structural similarity index (SSIM), and root mean square error (RMSE) in both non-shadow regions and the entire image. On the dataset with image shadow triplets dataset (ISTD), the performance was the best in non-shadow regions, with improvements of 0.36 dB in PSNR, 0.004 in SSIM, and 0.04 in RMSE compared with the second-best model. It ranked second in performance across all metrics for the entire image. On the augmented dataset with image shadow triplets (ISTD+), compared with six state-of-the-art shadow removal algorithms, the performance was the best in non-shadow regions, with improvements of 0.47 dB in PSNR and 0.1 in RMSE. Additionally, regarding the advanced shadow removal diffusion model ShadowDiffusion, the RMSE for the entire image was 3.63 on the SRD dataset when generating images of 256 × 256 pixels resolution. However, a significant performance drop occurred when generating images of the original resolution of 840 × 640 pixels, with RMSE increasing to 7.19. By contrast, our approach yielded RMSE values of 3.80 and 4.06 for images of dimensions 256 × 256 pixels and 840 × 640 pixels, respectively, showcasing consistent performance. Additionally, the time required to generate a single original image of size 840 × 640 pixels was reduced by roughly fourfold compared with ShadowDiffusion. Furthermore, our method was expanded to address image raindrop removal tasks, delivering competitive results on the RainDrop dataset.ConclusionIn this paper, the proposed method accelerates the sampling time of the diffusion model. While removing shadows, it restores missing color, brightness, and rich details in shadow regions. It treats shadow removal as an image generation task in the wavelet domain and designs two adaptive diffusion flows for the low-frequency and high-frequency components of the image wavelet domain to address the degradation of low-frequency (color, brightness) and high-frequency detail information caused by shadow images. Benefiting from the frequency decomposition of the wavelet transform, WNDM does not learn from the entangled pixel space domain but effectively separates and trains them separately, thereby generating more refined low-frequency and high-frequency information for reconstructing the final image. Extensive experiments on multiple datasets demonstrate the effectiveness of WNDM, achieving competitive results compared with state-of-the-art methods.关键词:shadow removal;diffusion model(DM);wavelet transform;dual-branch network;noise schedule189|129|0更新时间:2025-04-11 - “在图像处理领域,专家提出了一种解耦合三阶段增强网络,有效去除非均质雾霾图像中的雾霾并还原真实细节信息和颜色。”

摘要:ObjectiveThe absorption or scattering effect of microscopic particles in the atmosphere, such as aerosols, soot, and haze, will reduce image contrast, blur image details, and cause color distortion. These problems can decrease the accuracy of subsequent advanced computer vision tasks, such as object detection and image segmentation. Therefore, image dehazing has attracted increasing attention, and various image dehazing methods have been proposed. The ultimate goal of image dehazing is to recover a haze-free image from the input hazy image. At present, existing image dehazing algorithms can be divided into two categories: traditional dehazing algorithms based on image prior and image dehazing algorithms based on deep learning. The image priori-based dehazing algorithm uses the prior information and empirical rules of the image itself to estimate the transmittance map and atmospheric light value, and it utilizes the atmospheric scattering model to realize the image dehazing process. This approach can improve the contrast of the image to a certain extent but easily leads to excessive enhancement or color distortions in the dehazed results. Driven by a large amount of image data, the image dehazing algorithm based on deep learning can flexibly learn the mapping from hazy image to haze-free images by directly constructing an efficient convolutional neural network and obtain dehazed effects with better generalization performance and human visual perception. However, because of domain differences, the image dehazing algorithm trained on the synthesized homogeneous haze dataset usually has difficulty achieving satisfactory results on heterogeneous hazy images in the real world.MethodHaze will reduce the contrast of the image and make it look blurry. Thus, we train the network (i.e., the contrast enhancement module) with the brightness map of the hazy image and the brightness map corresponding to the clear image as the training image pairs, which effectively enhances the contrast of the brightness map and obtains the brightness enhancement map with a clear image structure and details. Furthermore, we calculate the gradient differences of the brightness maps before and after the contrast enhancement process and estimate the haze density information in the hazy images to guide saturation enhancement of the hazy images. Therefore, we propose an end-to-end decoupled triple-stage enhancement network for the heterogeneous haze dehazing task, which decouples the input hazy image with color space conversion into three channels, i.e., brightness, saturation, hues. Our algorithm first enhances the contrast of the brightness map through the contrast enhancement module so that the dehazed result holds clear structure and detail information. Then, it enhances the saturation channel of the image through the saturation enhancement module so that the dehazed result takes on a more vivid color. Finally, the color correction and enhancement module is used to fine-tune the overall color of the image so that the final dehazed result will be more in line with human visual perception. In particular, we design a haze density coding matrix in the saturation enhancement module and estimate the haze density information of the hazy image by calculating the gradient differences of the brightness maps before and after the contrast enhancement process. This step will provide guidance for the saturation enhancement module to ensure the accuracy of saturation recovery. The U-Net network structure exhibits superior performance in image enhancement tasks. Thus, we choose U-Net as the backbone network of our contrast and saturation enhancement modules and obtain multi-scale information of images through the encoder and decoder structure for better dehazing results. For the color correction and enhancement module, we only need to fine-tune the previously enhanced image results, which is why we only use a simple network with convolutional layers and skip connections to prevent the loss of image information with upsampling and downsampling operations.ResultCompared with the second best-performing model in performance, the average peak signal-to-noise ratio is increased by 8.5 dB and the average structural similarity is increased by 0.12. Our perceived fog density prediction value is 0.47 and the estimated haze density is 0.21 in the real-world dataset, both of which rank first. In the SOTS dataset, our average peak signal-to-noise ratio is 16.52 dB and the average structural similarity is 0.80, which are comparable to the existing algorithms in terms of human visual perception.ConclusionThrough a series of subjective and objective experimental comparisons, the experimental results show that our algorithm has excellent processing ability for non-homogeneous hazy images and can effectively restore the real details and colors of hazy images.关键词:deep learning;non-homogeneous image dehazing;saturation enhancement;contrast enhancement;triple-stage enhancement152|171|0更新时间:2025-04-11

摘要:ObjectiveThe absorption or scattering effect of microscopic particles in the atmosphere, such as aerosols, soot, and haze, will reduce image contrast, blur image details, and cause color distortion. These problems can decrease the accuracy of subsequent advanced computer vision tasks, such as object detection and image segmentation. Therefore, image dehazing has attracted increasing attention, and various image dehazing methods have been proposed. The ultimate goal of image dehazing is to recover a haze-free image from the input hazy image. At present, existing image dehazing algorithms can be divided into two categories: traditional dehazing algorithms based on image prior and image dehazing algorithms based on deep learning. The image priori-based dehazing algorithm uses the prior information and empirical rules of the image itself to estimate the transmittance map and atmospheric light value, and it utilizes the atmospheric scattering model to realize the image dehazing process. This approach can improve the contrast of the image to a certain extent but easily leads to excessive enhancement or color distortions in the dehazed results. Driven by a large amount of image data, the image dehazing algorithm based on deep learning can flexibly learn the mapping from hazy image to haze-free images by directly constructing an efficient convolutional neural network and obtain dehazed effects with better generalization performance and human visual perception. However, because of domain differences, the image dehazing algorithm trained on the synthesized homogeneous haze dataset usually has difficulty achieving satisfactory results on heterogeneous hazy images in the real world.MethodHaze will reduce the contrast of the image and make it look blurry. Thus, we train the network (i.e., the contrast enhancement module) with the brightness map of the hazy image and the brightness map corresponding to the clear image as the training image pairs, which effectively enhances the contrast of the brightness map and obtains the brightness enhancement map with a clear image structure and details. Furthermore, we calculate the gradient differences of the brightness maps before and after the contrast enhancement process and estimate the haze density information in the hazy images to guide saturation enhancement of the hazy images. Therefore, we propose an end-to-end decoupled triple-stage enhancement network for the heterogeneous haze dehazing task, which decouples the input hazy image with color space conversion into three channels, i.e., brightness, saturation, hues. Our algorithm first enhances the contrast of the brightness map through the contrast enhancement module so that the dehazed result holds clear structure and detail information. Then, it enhances the saturation channel of the image through the saturation enhancement module so that the dehazed result takes on a more vivid color. Finally, the color correction and enhancement module is used to fine-tune the overall color of the image so that the final dehazed result will be more in line with human visual perception. In particular, we design a haze density coding matrix in the saturation enhancement module and estimate the haze density information of the hazy image by calculating the gradient differences of the brightness maps before and after the contrast enhancement process. This step will provide guidance for the saturation enhancement module to ensure the accuracy of saturation recovery. The U-Net network structure exhibits superior performance in image enhancement tasks. Thus, we choose U-Net as the backbone network of our contrast and saturation enhancement modules and obtain multi-scale information of images through the encoder and decoder structure for better dehazing results. For the color correction and enhancement module, we only need to fine-tune the previously enhanced image results, which is why we only use a simple network with convolutional layers and skip connections to prevent the loss of image information with upsampling and downsampling operations.ResultCompared with the second best-performing model in performance, the average peak signal-to-noise ratio is increased by 8.5 dB and the average structural similarity is increased by 0.12. Our perceived fog density prediction value is 0.47 and the estimated haze density is 0.21 in the real-world dataset, both of which rank first. In the SOTS dataset, our average peak signal-to-noise ratio is 16.52 dB and the average structural similarity is 0.80, which are comparable to the existing algorithms in terms of human visual perception.ConclusionThrough a series of subjective and objective experimental comparisons, the experimental results show that our algorithm has excellent processing ability for non-homogeneous hazy images and can effectively restore the real details and colors of hazy images.关键词:deep learning;non-homogeneous image dehazing;saturation enhancement;contrast enhancement;triple-stage enhancement152|171|0更新时间:2025-04-11

Image Processing and Coding

- “在图像识别领域,专家提出了BSGAN-GP模型,有效提升了少数类识别准确度,为深度学习图像识别提供新方案。”

摘要:ObjectiveImage classification technology has realized high-precision automatic classification and screening of digital images with the improvement of algorithm performance and the development of computer hardware. This technology uses a computer to conduct a quantitative analysis of the image, classifying each area in the image or image into one of several categories to replace human visual interpretation. However, in practice, a large number of training samples and high-quality annotation information are required for high-quality training to obtain high-accuracy classification results. For large-scale image datasets, existing image annotation methods need to be performed manually by industry experts, such as polygon annotation and key point annotation. As a result of the high cost of expert annotation and the difficulty of high-quality annotation, less image data are labeled, thus seriously hindering the development of deep learning in computer vision. To this end, the semi-supervised generative adversarial network (GAN) paradigm is proposed because it can use a large amount of unlabeled data to obtain the distribution characteristics of real samples in the feature space and more accurately determine the classification boundaries. The generative semi-supervised GAN model, such as DCGAN and semi-supervised GAN, can create new samples and increase sample diversity, thus being more widely used in various fields. However, this model is often unstable in adversarial training; especially on an unbalanced dataset, the gradient can easily fall into the trap of predicting most of the data. Image datasets in real-world industrial applications are often category-unbalanced, which is why this imbalance negatively affects the accuracy of mining classifiers. Several recent studies have revealed the effectiveness of GAN, such as DAGAN, BSSGAN, BAGAN, and improve-BAGAN, in alleviating the problem of imbalance. Among them, BAGAN acts as an enhancement method to recover the balance in unbalanced datasets, which can learn useful features from most classes and use these features to generate images for minority classes. However, the experimental results show that its encoder lost many details in the image reconstruction process, making the appearance of similar categories not easy to distinguish in the reconstructed figures. Improve-BAGAN improves the BAGAN, and increasing the gradient penalty makes the model training more stable. Improve-BAGAN is the state-of-the-art achievement of existing supervised learning to solve unbalanced problems, but achieving the expected results of the model requires manual labeling of a sufficient number of samples, which greatly increases the labor and time costs.MethodIn this study, a new balanced image recognition model based on semi-supervised GAN is established, enabling the discriminator of semi-supervised GAN to fairly identify every class of unbalanced dataset. The proposed balanced image recognition model BSGAN-GP consists of two components: the category balancing random selection (CBRS) algorithm and the discriminator for adding gradient penalty. For the brand-new CBRS algorithm, we randomly selected the label data in the real data by category so that the number of labels in each class in the input model is consistent, ensuring a balance between the real sample and the generator synthesis sample. Then, we conduct confrontation training, and the generator NetG with fixed parameters generates the same number of false sample input discriminators for each class. We then update the discriminator NetD to ensure that the discriminator can fairly judge all classes to improve the identification accuracy of the minority classes. BSGAN-GP adds an additional gradient penalty item in the discriminator loss function to stabilize the model training. The optimizer selected for the experiment was the Adam algorithm, with the learning rate set to 0.000 2 and the momentum set to (0.5, 0.9). The batch size for all three datasets was 100, where the MNIST and Fashion datasets were set to 1 000, or 100 per class and 5 000 for SVHN, or 500 per class. The experiment used an RTX 4090 GPU and 24 GB of memory. Most studies in the experiment were completed within 4 500 s. For MNIST and Fashion-MNIST, we trained 25 epochs, each of which took 85 s and 108 s, respectively, on our device. For the SVHN, we trained 30 epochs, with each epoch requiring 110 s on our device.ResultThe experiment is compared with six semi-supervised methods and three fully supervised parties in the three mainstream datasets. An unbalanced version of the three datasets is developed to prove the improved identification accuracy of a few classes. The experimental indicators include overall accuracy, category recognition rate, confusion matrix, and synthesized images. In the unbalanced Fashion-MNIST, compared with the semi-supervised GAN, the overall accuracy value increased by 3.281%, and the minority class recognition rate increased by 7.14%. In the unbalanced MNIST, the recognition rate of the corresponding four minority classes increased by 2.68% to 7.40% compared with the semi-supervised GAN. In the SVHN, the overall accuracy value increased by 3.515% compared with the semi-supervised GAN. Quality comparison of synthetic images was also conducted in three datasets to verify the effectiveness of the CBRS algorithm, and the improvement of synthetic images on the quantity and quality of a few classes proved its effect. Ablation experiments evaluate the importance of the proposed module CBRS versus the introduced module in the network. The CBRS module improved the overall accuracy of the model by 2% to 3%, and the GP module improved the overall accuracy of the model by 0.8% to 1.8%.ConclusionIn this study, we propose a new algorithm called CBRS to achieve fair recognition of all classes in unbalanced datasets. We introduced a gradient penalty into the discriminator of semi-supervised GANs for more stable training. Experiment results indicate that CBRS can achieve fairer image recognition and higher-quality synthesized image results.关键词:deep learning;semi-supervised learning(SSL);generative adversarial network(GAN);unbalanced image recognition;gradient punishment141|142|0更新时间:2025-04-11

摘要:ObjectiveImage classification technology has realized high-precision automatic classification and screening of digital images with the improvement of algorithm performance and the development of computer hardware. This technology uses a computer to conduct a quantitative analysis of the image, classifying each area in the image or image into one of several categories to replace human visual interpretation. However, in practice, a large number of training samples and high-quality annotation information are required for high-quality training to obtain high-accuracy classification results. For large-scale image datasets, existing image annotation methods need to be performed manually by industry experts, such as polygon annotation and key point annotation. As a result of the high cost of expert annotation and the difficulty of high-quality annotation, less image data are labeled, thus seriously hindering the development of deep learning in computer vision. To this end, the semi-supervised generative adversarial network (GAN) paradigm is proposed because it can use a large amount of unlabeled data to obtain the distribution characteristics of real samples in the feature space and more accurately determine the classification boundaries. The generative semi-supervised GAN model, such as DCGAN and semi-supervised GAN, can create new samples and increase sample diversity, thus being more widely used in various fields. However, this model is often unstable in adversarial training; especially on an unbalanced dataset, the gradient can easily fall into the trap of predicting most of the data. Image datasets in real-world industrial applications are often category-unbalanced, which is why this imbalance negatively affects the accuracy of mining classifiers. Several recent studies have revealed the effectiveness of GAN, such as DAGAN, BSSGAN, BAGAN, and improve-BAGAN, in alleviating the problem of imbalance. Among them, BAGAN acts as an enhancement method to recover the balance in unbalanced datasets, which can learn useful features from most classes and use these features to generate images for minority classes. However, the experimental results show that its encoder lost many details in the image reconstruction process, making the appearance of similar categories not easy to distinguish in the reconstructed figures. Improve-BAGAN improves the BAGAN, and increasing the gradient penalty makes the model training more stable. Improve-BAGAN is the state-of-the-art achievement of existing supervised learning to solve unbalanced problems, but achieving the expected results of the model requires manual labeling of a sufficient number of samples, which greatly increases the labor and time costs.MethodIn this study, a new balanced image recognition model based on semi-supervised GAN is established, enabling the discriminator of semi-supervised GAN to fairly identify every class of unbalanced dataset. The proposed balanced image recognition model BSGAN-GP consists of two components: the category balancing random selection (CBRS) algorithm and the discriminator for adding gradient penalty. For the brand-new CBRS algorithm, we randomly selected the label data in the real data by category so that the number of labels in each class in the input model is consistent, ensuring a balance between the real sample and the generator synthesis sample. Then, we conduct confrontation training, and the generator NetG with fixed parameters generates the same number of false sample input discriminators for each class. We then update the discriminator NetD to ensure that the discriminator can fairly judge all classes to improve the identification accuracy of the minority classes. BSGAN-GP adds an additional gradient penalty item in the discriminator loss function to stabilize the model training. The optimizer selected for the experiment was the Adam algorithm, with the learning rate set to 0.000 2 and the momentum set to (0.5, 0.9). The batch size for all three datasets was 100, where the MNIST and Fashion datasets were set to 1 000, or 100 per class and 5 000 for SVHN, or 500 per class. The experiment used an RTX 4090 GPU and 24 GB of memory. Most studies in the experiment were completed within 4 500 s. For MNIST and Fashion-MNIST, we trained 25 epochs, each of which took 85 s and 108 s, respectively, on our device. For the SVHN, we trained 30 epochs, with each epoch requiring 110 s on our device.ResultThe experiment is compared with six semi-supervised methods and three fully supervised parties in the three mainstream datasets. An unbalanced version of the three datasets is developed to prove the improved identification accuracy of a few classes. The experimental indicators include overall accuracy, category recognition rate, confusion matrix, and synthesized images. In the unbalanced Fashion-MNIST, compared with the semi-supervised GAN, the overall accuracy value increased by 3.281%, and the minority class recognition rate increased by 7.14%. In the unbalanced MNIST, the recognition rate of the corresponding four minority classes increased by 2.68% to 7.40% compared with the semi-supervised GAN. In the SVHN, the overall accuracy value increased by 3.515% compared with the semi-supervised GAN. Quality comparison of synthetic images was also conducted in three datasets to verify the effectiveness of the CBRS algorithm, and the improvement of synthetic images on the quantity and quality of a few classes proved its effect. Ablation experiments evaluate the importance of the proposed module CBRS versus the introduced module in the network. The CBRS module improved the overall accuracy of the model by 2% to 3%, and the GP module improved the overall accuracy of the model by 0.8% to 1.8%.ConclusionIn this study, we propose a new algorithm called CBRS to achieve fair recognition of all classes in unbalanced datasets. We introduced a gradient penalty into the discriminator of semi-supervised GANs for more stable training. Experiment results indicate that CBRS can achieve fairer image recognition and higher-quality synthesized image results.关键词:deep learning;semi-supervised learning(SSL);generative adversarial network(GAN);unbalanced image recognition;gradient punishment141|142|0更新时间:2025-04-11 - “在图像分类领域,FDPRNet通过特征重排列注意力机制和双池化残差结构,显著提升了分类准确率和模型泛化能力。”